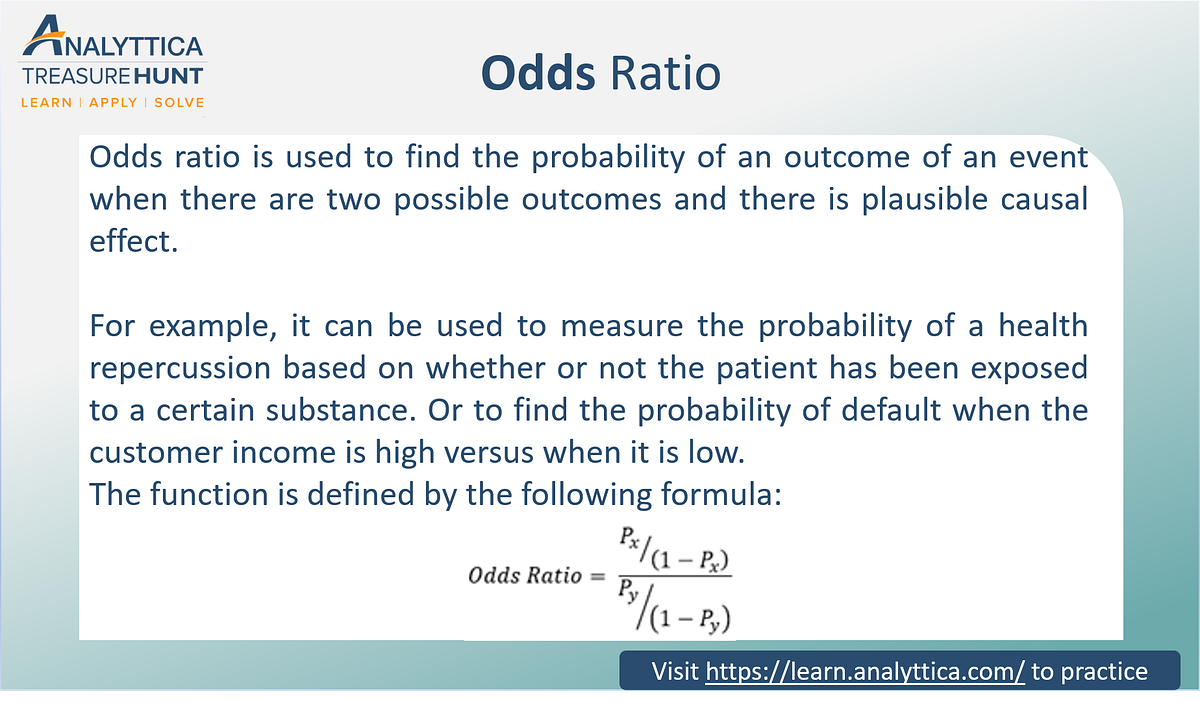

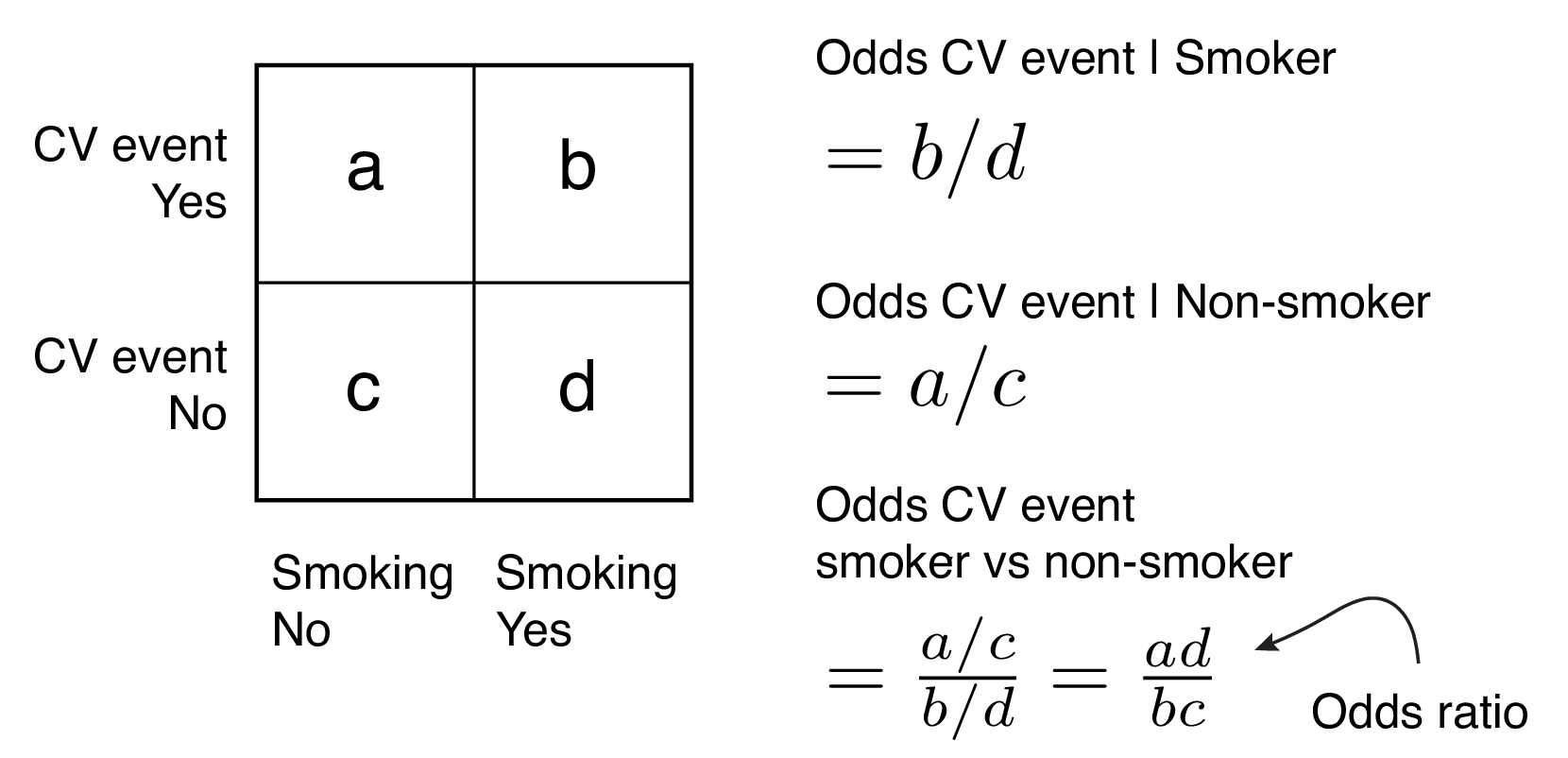

In a logistic regression model, odds ratios provide a more coherent solution than probabilities. An odds ratio represents the constant effect of an independent variable on a dependent variable. Here, constant means that the ratio does not change as the independent (predictor) variable changes.

In general, with any algorithm, the coefficient assigned to a variable denotes the significance of that variable. A high coefficient value means the variable plays a major role in deciding the boundary.

In my practical experience, the unadjusted odds ratio obtained from logistic regression and the odds ratio calculated from a 2x2 table are almost the same.

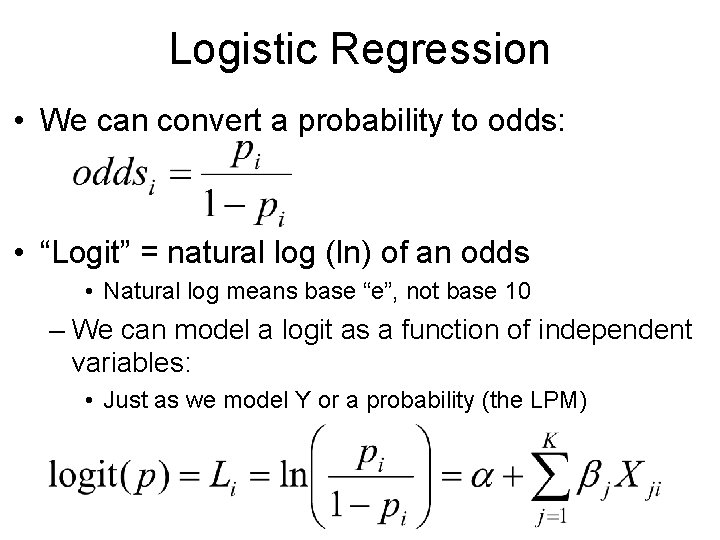

Odds vs probability logistic regression

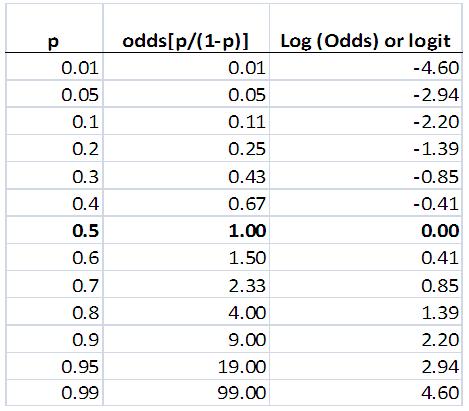

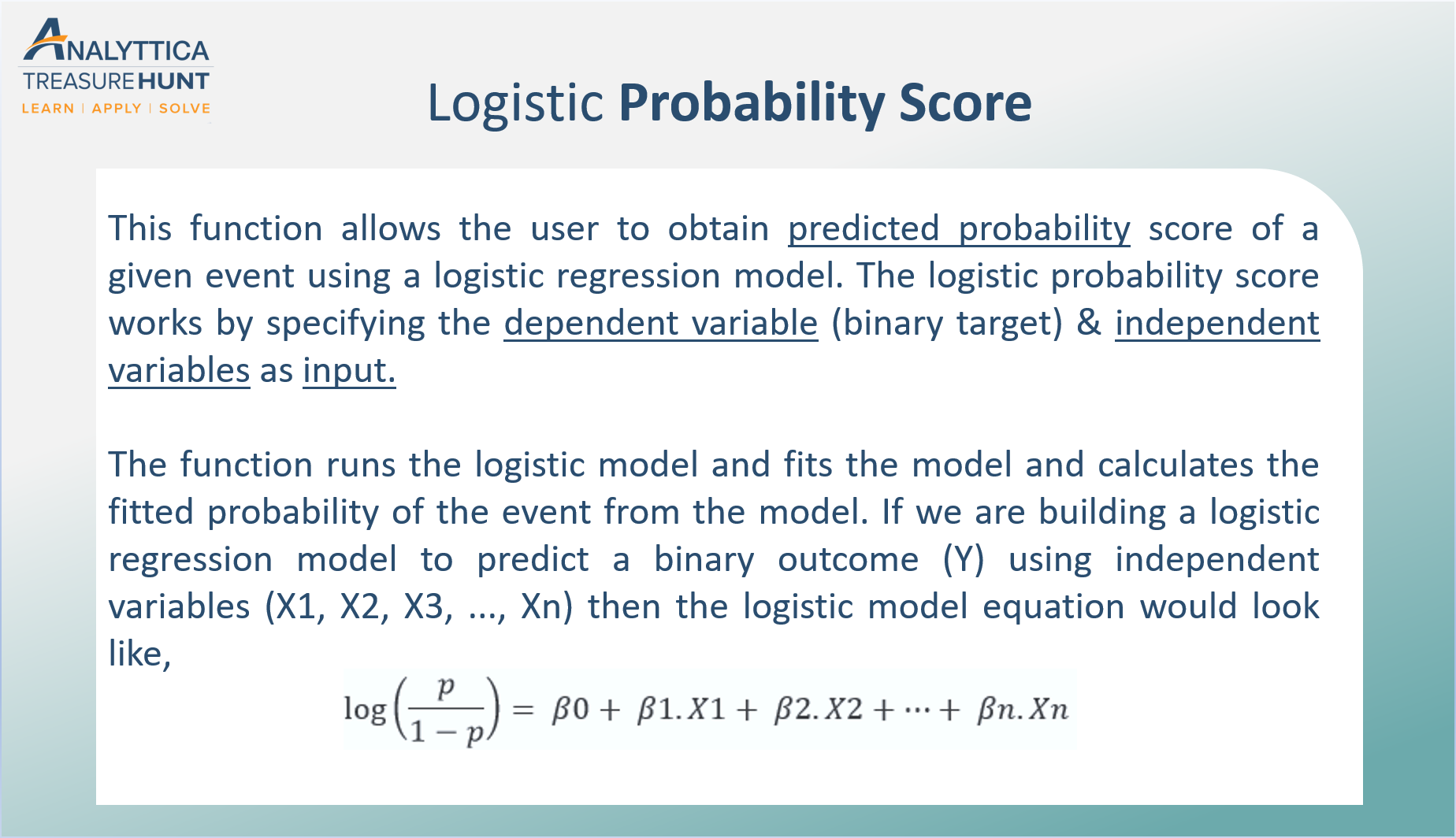

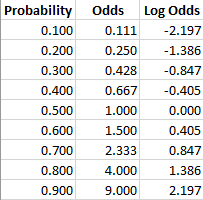

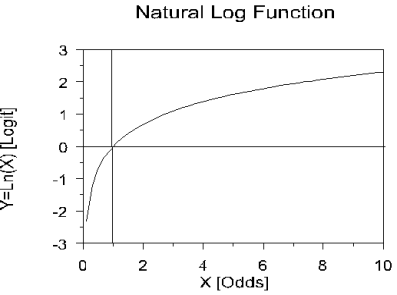

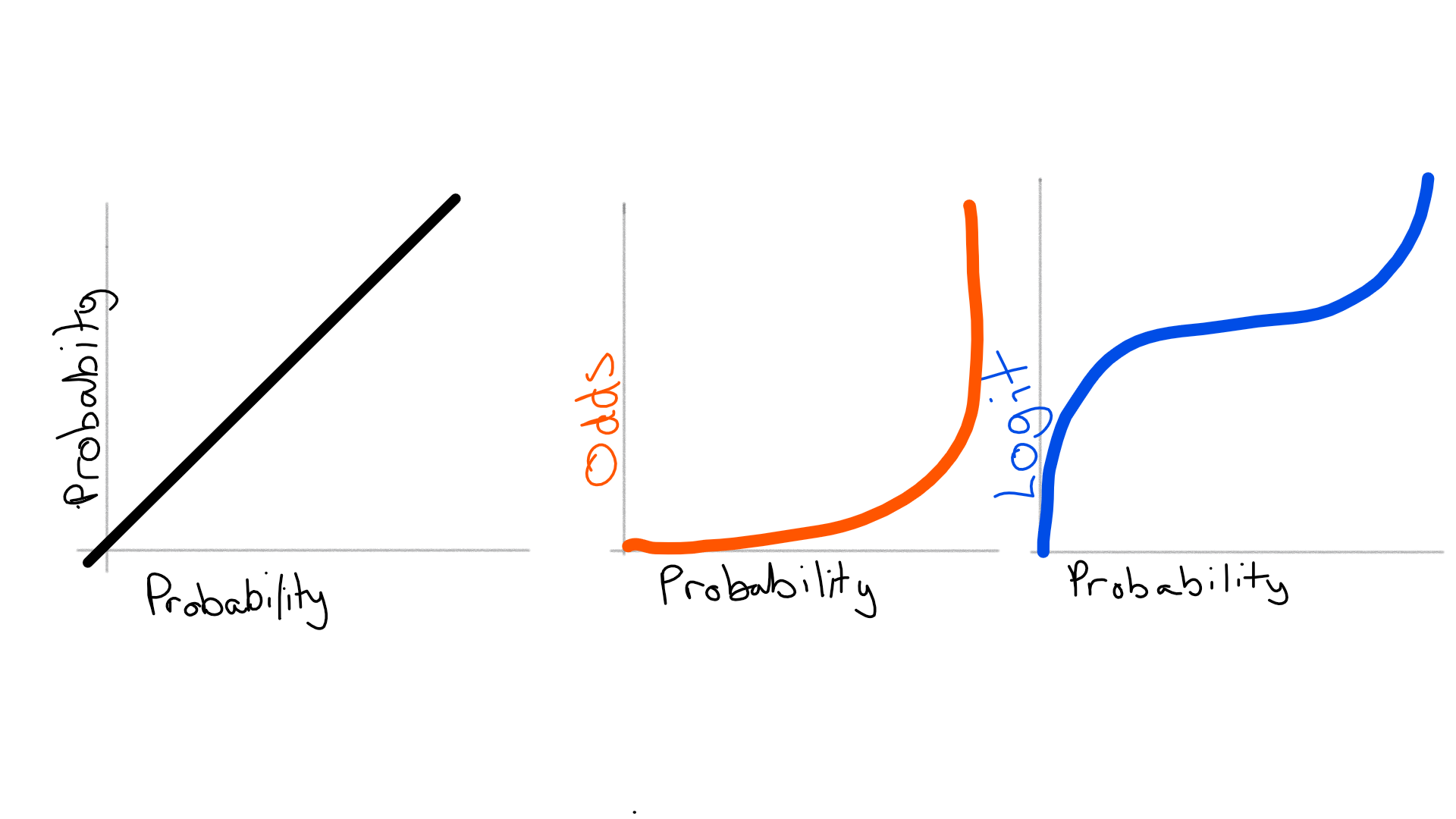

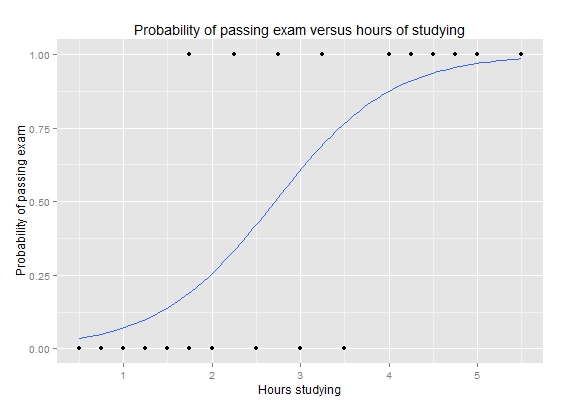

The intercept gives the survival probability if Pclass were zero. However, you cannot simply add the probability for, say, Pclass == 1 to the survival probability for Pclass == 0 to get the survival chance of 1st-class passengers. Instead, consider that logistic regression can be interpreted like an ordinary regression as long as you work in logits.

Thus, using log odds is slightly more advantageous than using probability. Before getting into the details of logistic regression, let us briefly understand what odds are. Simply put, odds are the chances of success divided by the chances of failure.
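
The point about adding on the logit scale rather than the probability scale can be sketched as follows. The coefficient values are hypothetical and chosen only for illustration; they are not fitted to the Titanic data or taken from the quoted answer.

```python
import math

def sigmoid(z):
    """Convert a logit (log odds) to a probability."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical coefficients, for illustration only (not fitted to real data)
intercept = 1.4      # log odds of survival when Pclass == 0
beta_pclass = -0.85  # change in log odds per one-unit increase in Pclass

# Correct: add on the logit scale, then convert to a probability once
logit_first_class = intercept + beta_pclass * 1
p_baseline = sigmoid(intercept)
p_first_class = sigmoid(logit_first_class)

# Incorrect: converting each term separately and adding the probabilities
naive_sum = p_baseline + sigmoid(beta_pclass)

print(f"baseline probability:       {p_baseline:.3f}")
print(f"1st-class probability:      {p_first_class:.3f}")
print(f"naive sum of probabilities: {naive_sum:.3f}")
```

With these assumed values the naive sum exceeds 1, which is not a valid probability, while the logit-scale calculation stays between 0 and 1.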

Faq How Do I Interpret Odds Ratios In Logistic Regression

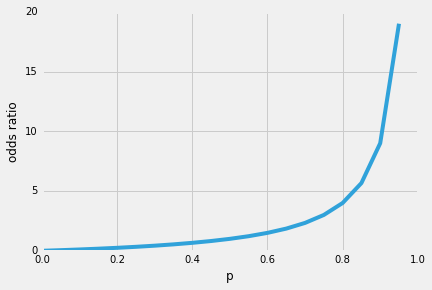

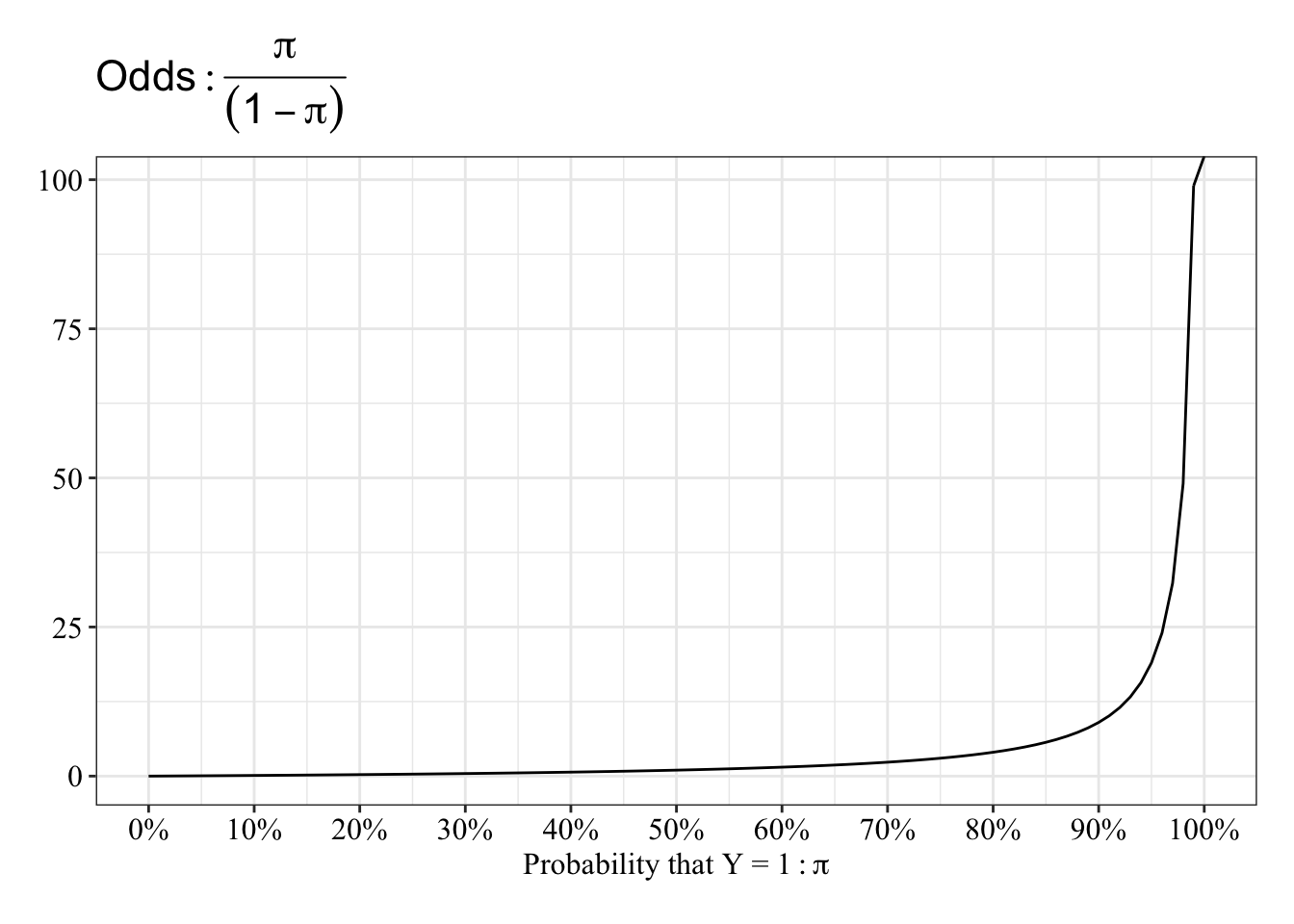

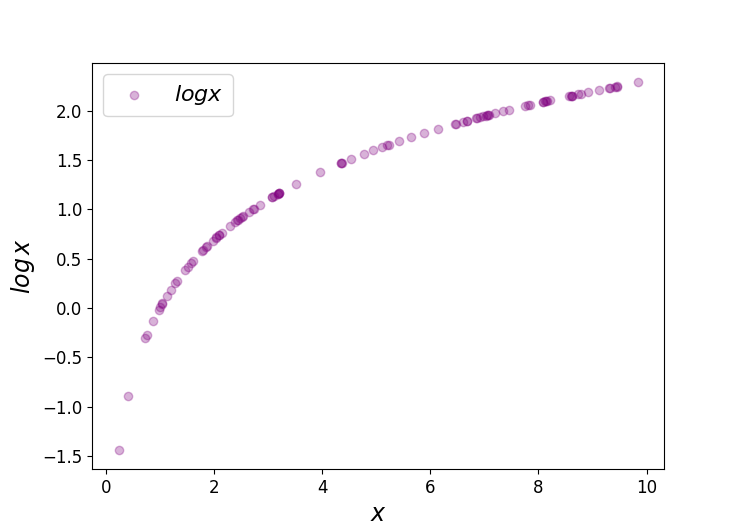

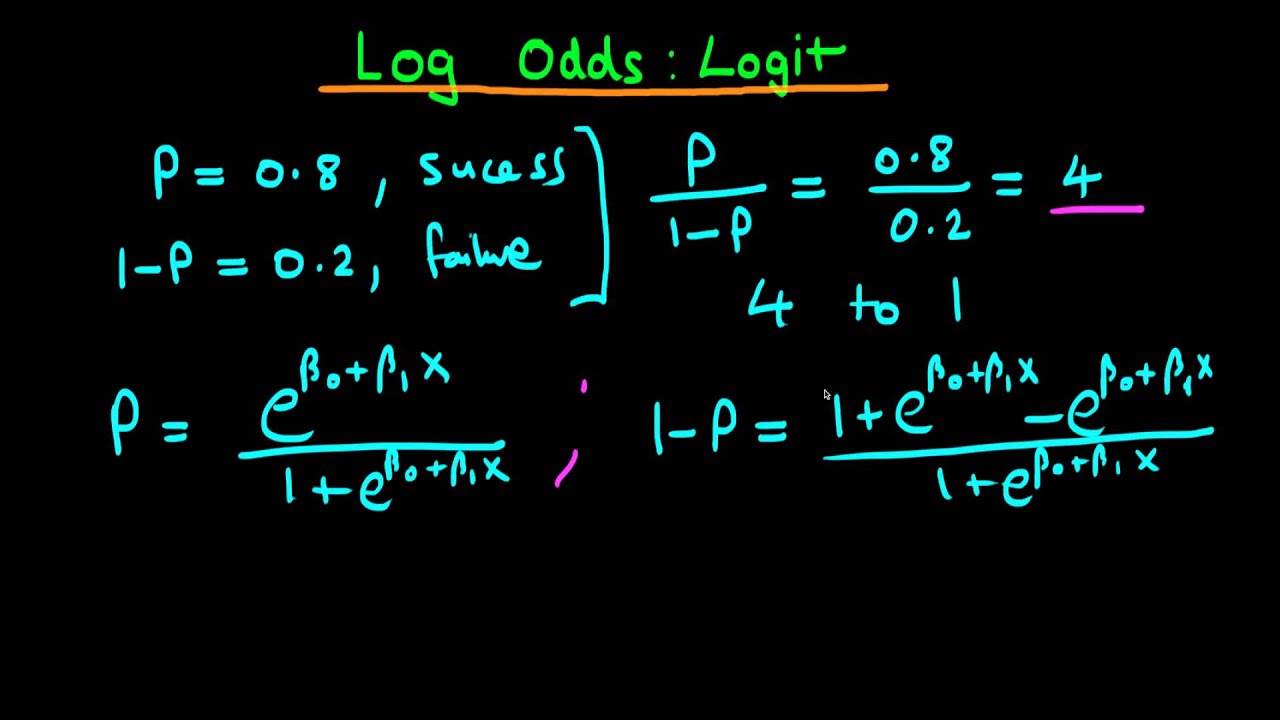

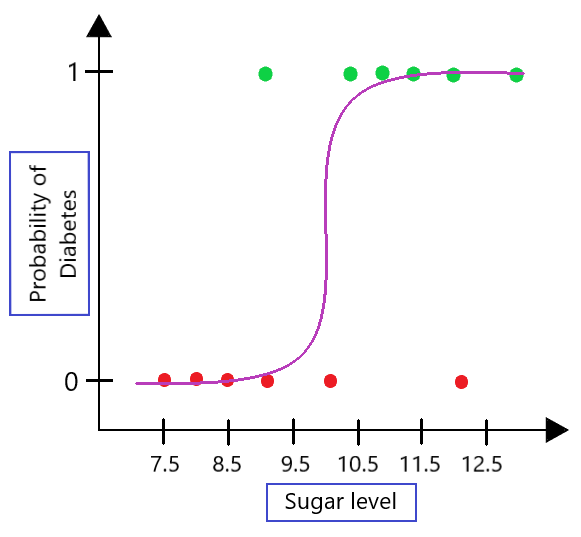

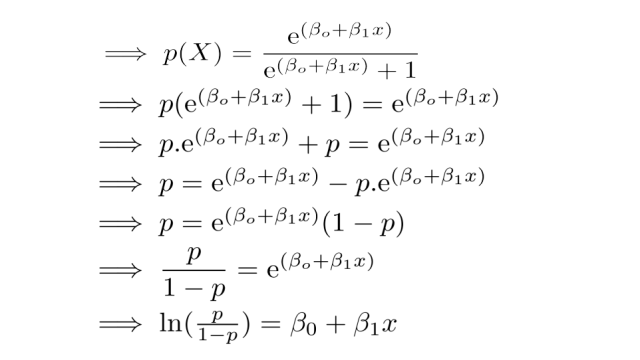

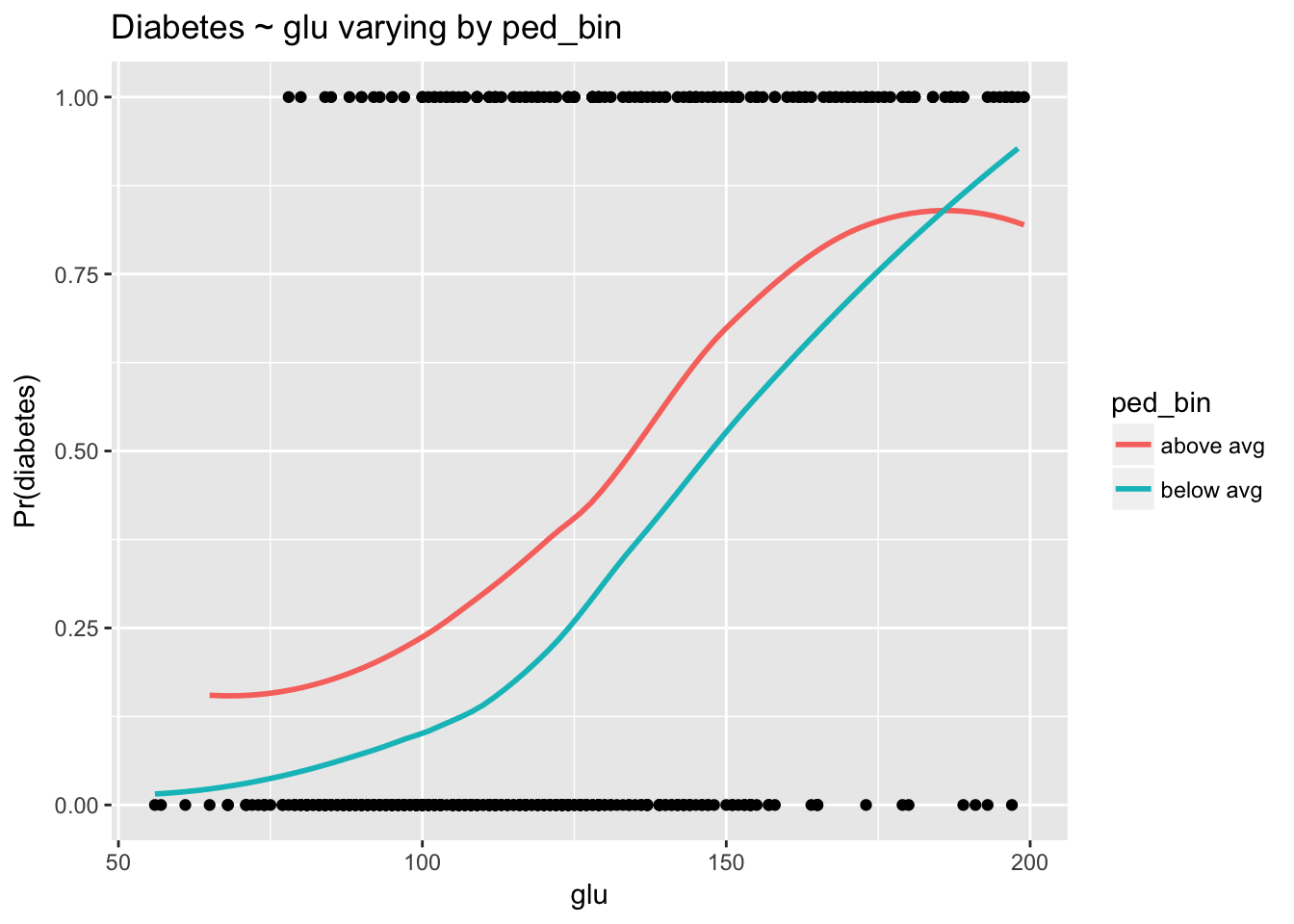

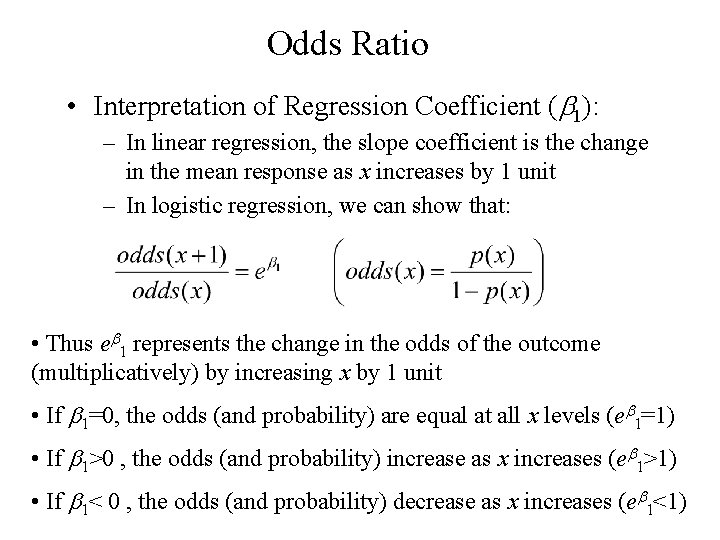

Next, we discuss odds and log odds.

Odds: the relationship between x and probability is not very intuitive, so let's modify the above equation to find a more intuitive one. Step 1: calculate the probability of not having blood sugar. Step 2: where p = probability of having diabetes and 1 − p = probability of not having diabetes.

This video explains how the linear combination of the regression coefficients and the independent variables can be interpreted as representing the 'log odds'.

Logistic Regression and Odds Ratio (A. Chang). Odds ratio review: let \(p_1\) be the probability of success in row 1 (the probability of brain tumor in row 1); \(1 - p_1\) is the probability of no success in row 1 (the probability of no brain tumor in row 1). The odds of getting the disease for the people who were exposed to the risk factor, where \(\hat{p}_1\) is an estimate of \(p_1\), are \(O = \hat{p}_1 / (1 - \hat{p}_1)\).

In video two we review and introduce the concepts of basic probability, odds, and the odds ratio, and then apply them to a quick logistic regression example.

Repeat the above procedure for each explanatory variable. PROC LOGISTIC tests the proportional odds assumption and gives the corresponding chi-square p-value. If the p-value is significant, the proportional odds assumption is violated and a traditional cumulative logistic regression should not be run.

I see a lot of researchers get stuck when learning logistic regression because they are not used to thinking of likelihood on an odds scale. Equal odds are 1: 1 success for every 1 failure (1:1). Equal probabilities are 0.5: 1 success for every 2 trials. Odds can range from 0 to infinity. Odds greater than 1 indicate that success is more likely than failure.
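
A minimal sketch of moving between the two scales may help here. The function names `prob_to_odds` and `odds_to_prob` are illustrative, not taken from any of the quoted sources.

```python
def prob_to_odds(p):
    """Odds = probability of success divided by probability of failure."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1); odds are undefined at p = 1")
    return p / (1.0 - p)

def odds_to_prob(odds):
    """Inverse conversion: probability = odds / (1 + odds)."""
    if odds < 0.0:
        raise ValueError("odds cannot be negative")
    return odds / (1.0 + odds)

# Equal probabilities (0.5) correspond to equal odds (1 success per failure)
print(prob_to_odds(0.5))
# Odds greater than 1 mean success is more likely than failure
print(odds_to_prob(3.0))
```

Probabilities are bounded by 0 and 1, while the odds grow without limit as the probability approaches 1.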

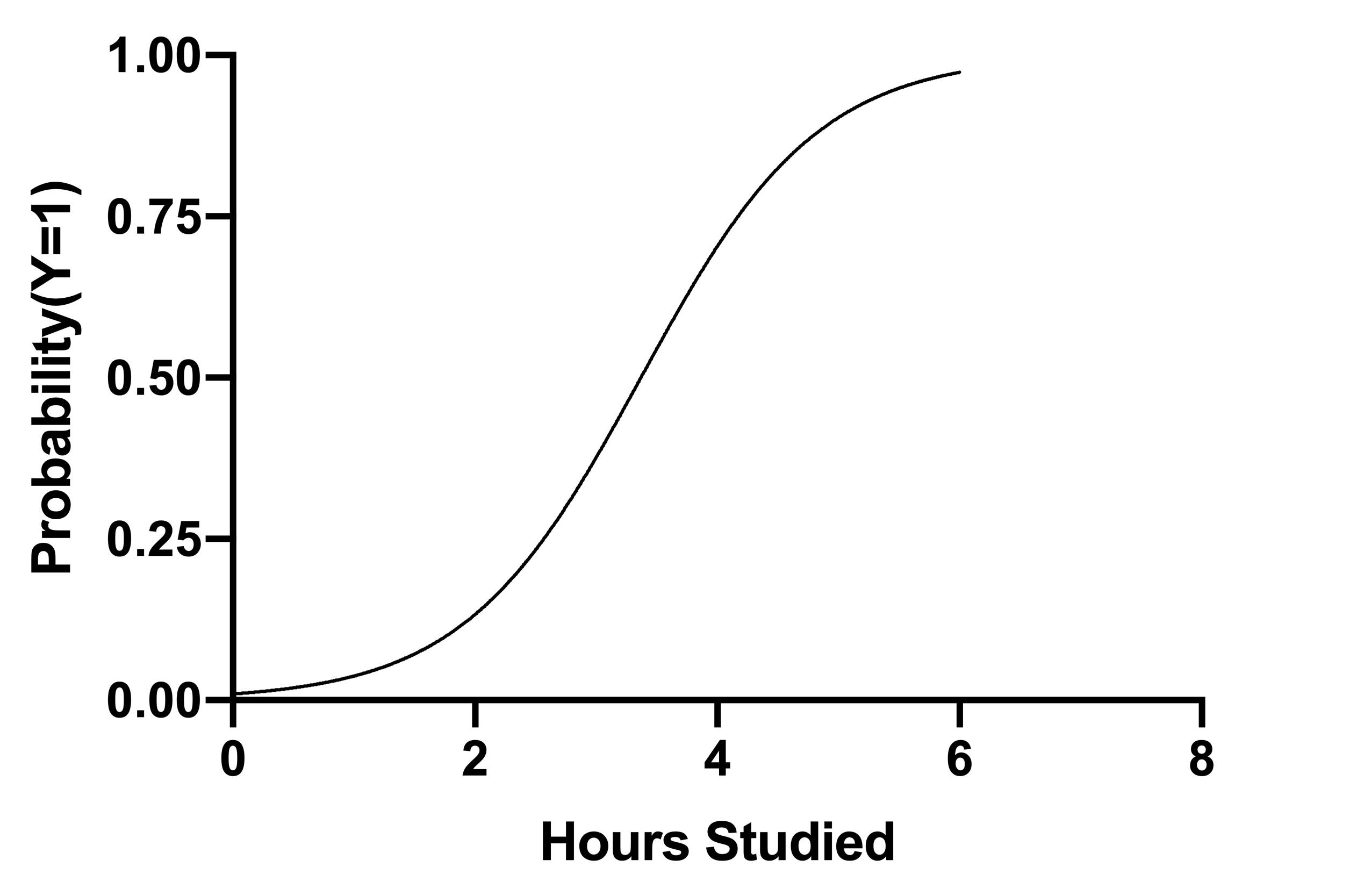

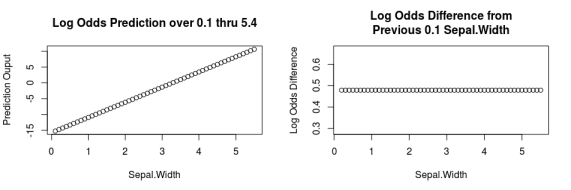

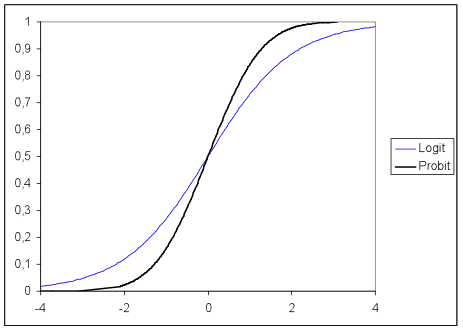

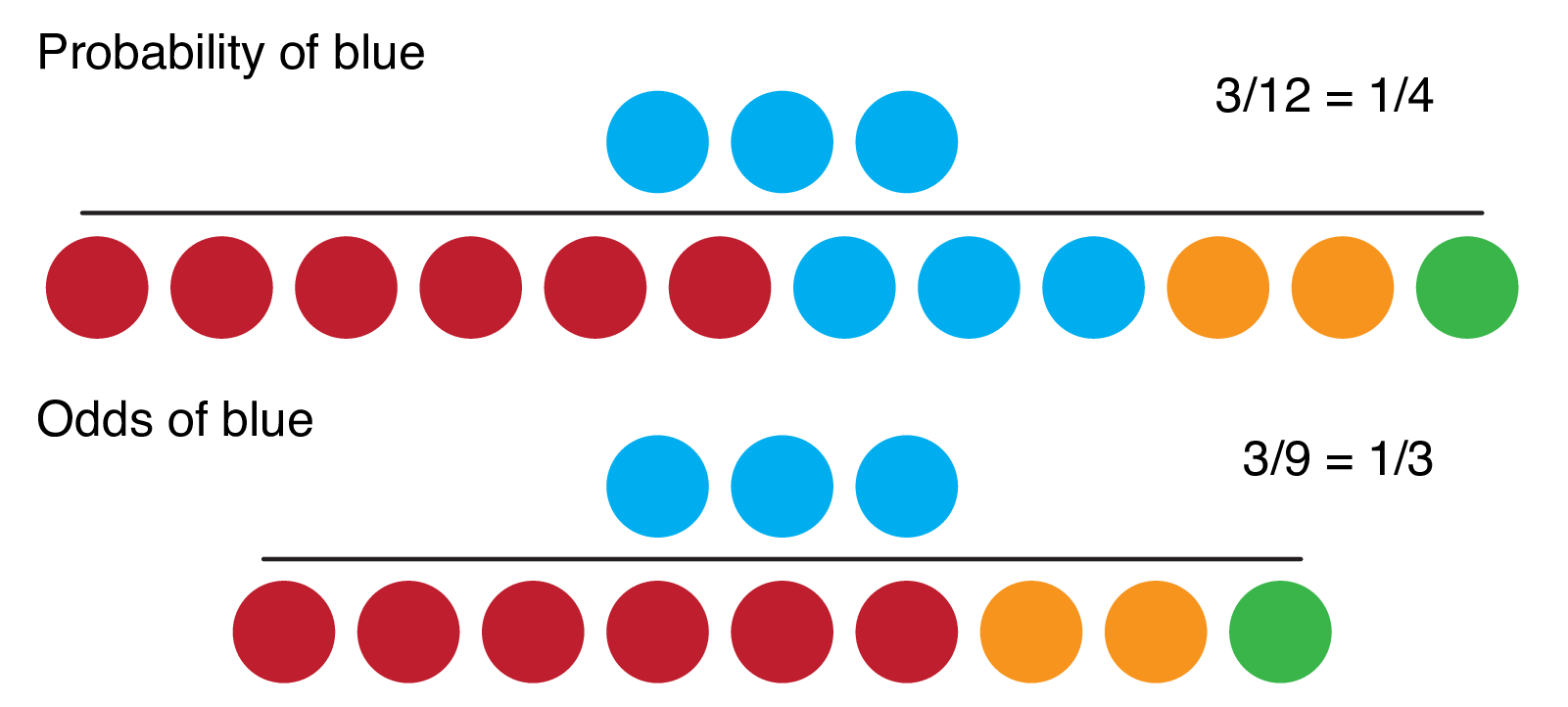

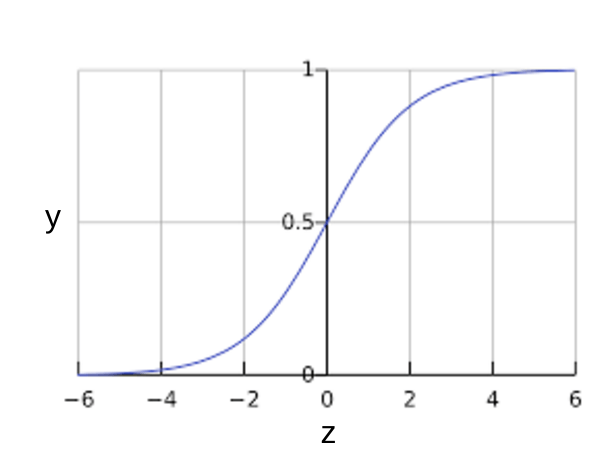

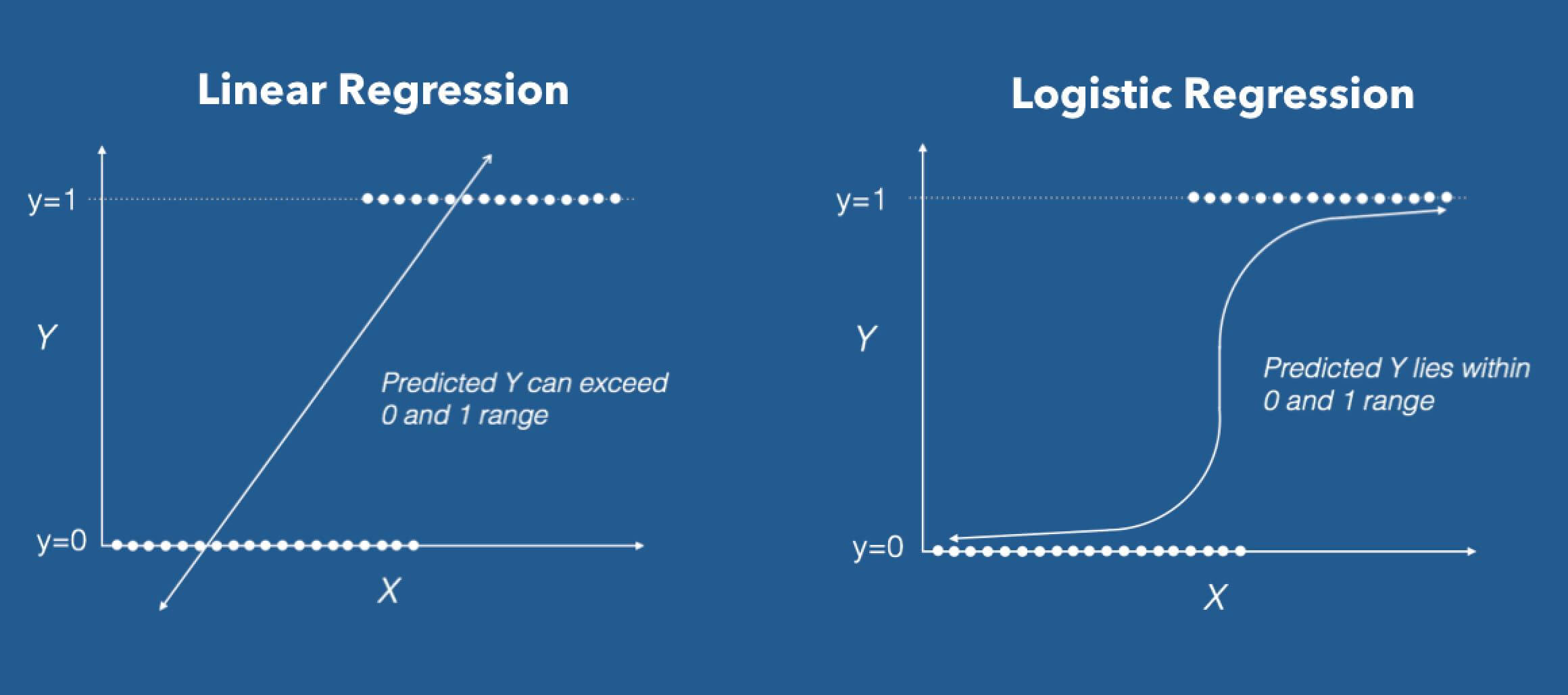

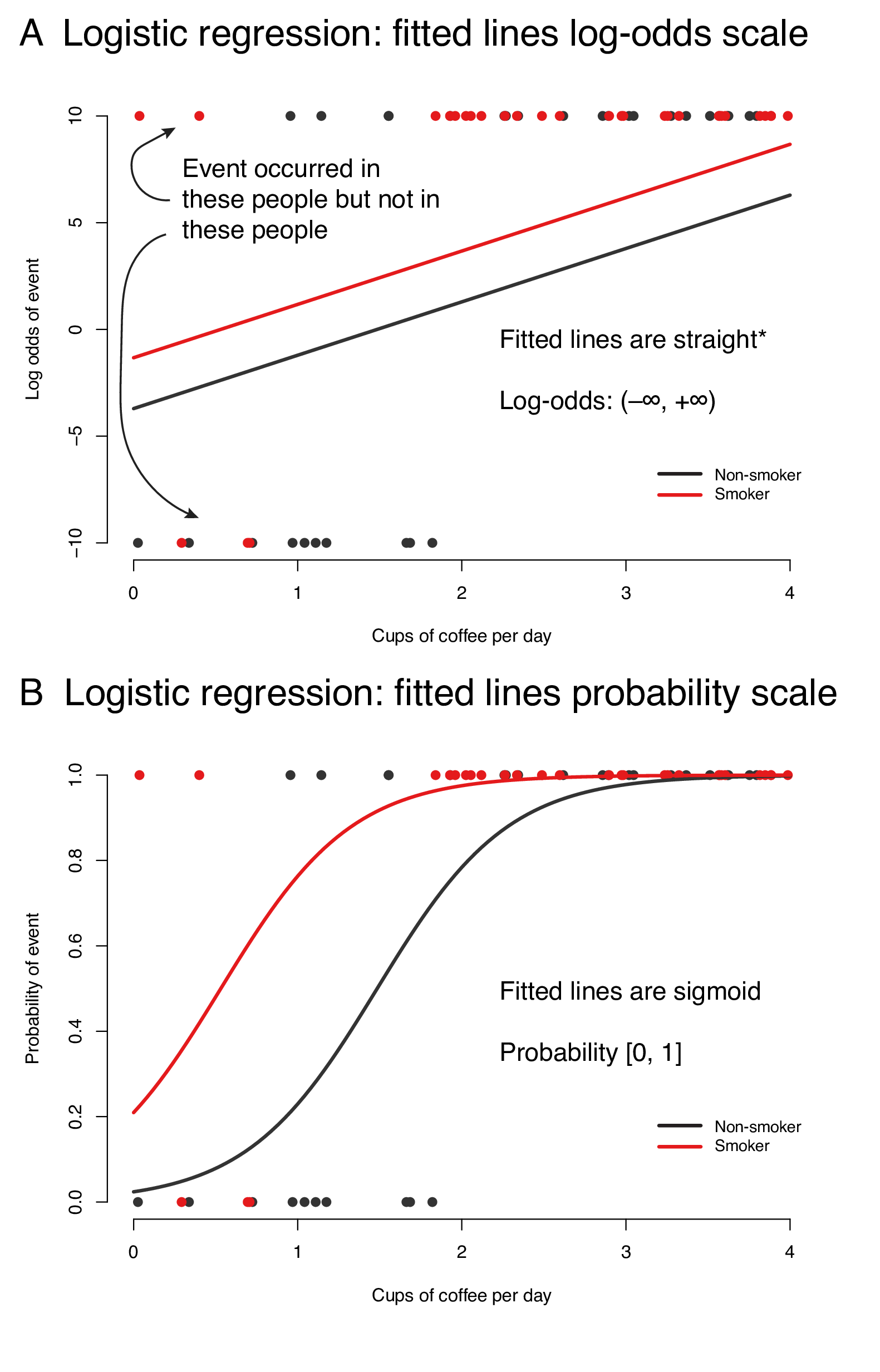

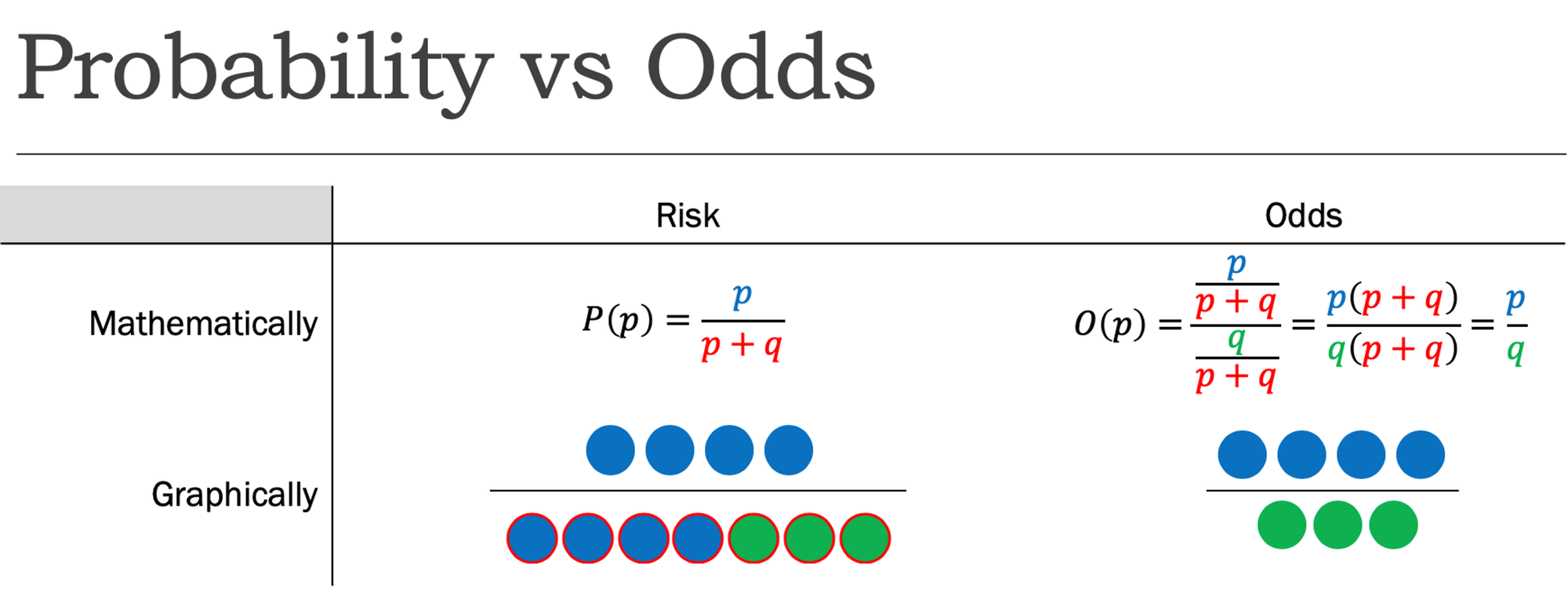

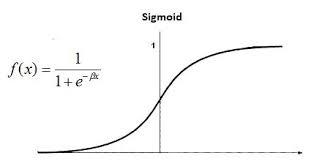

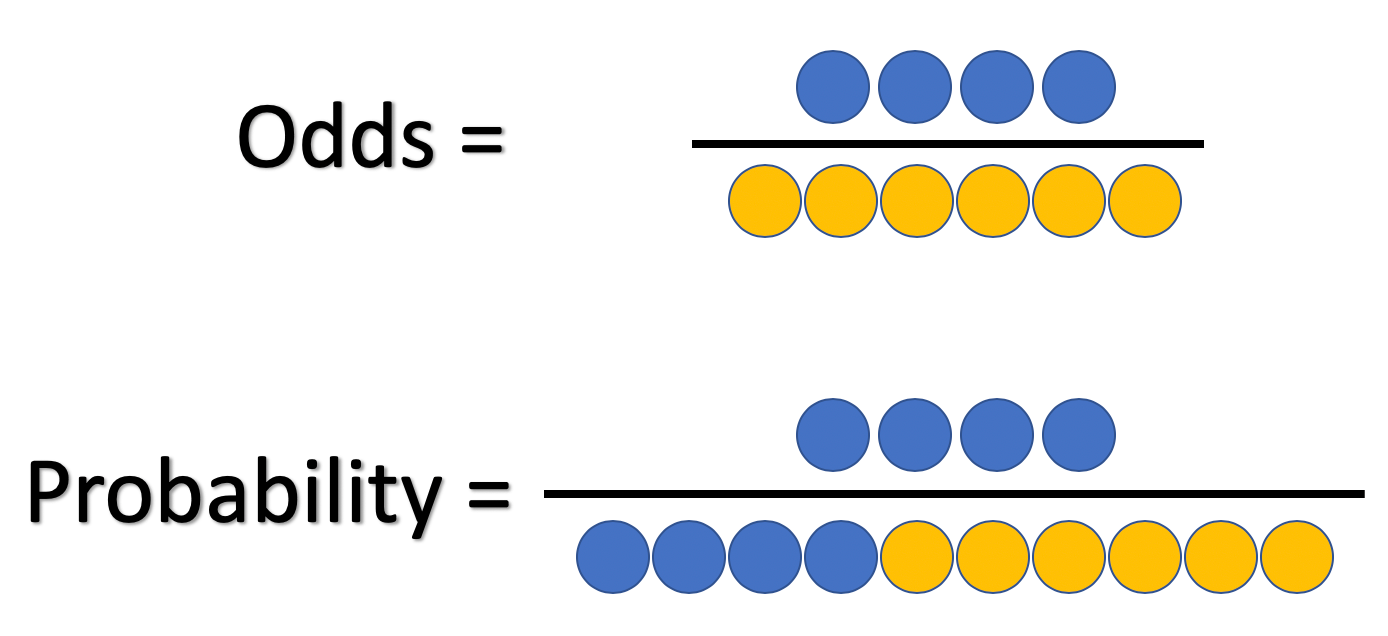

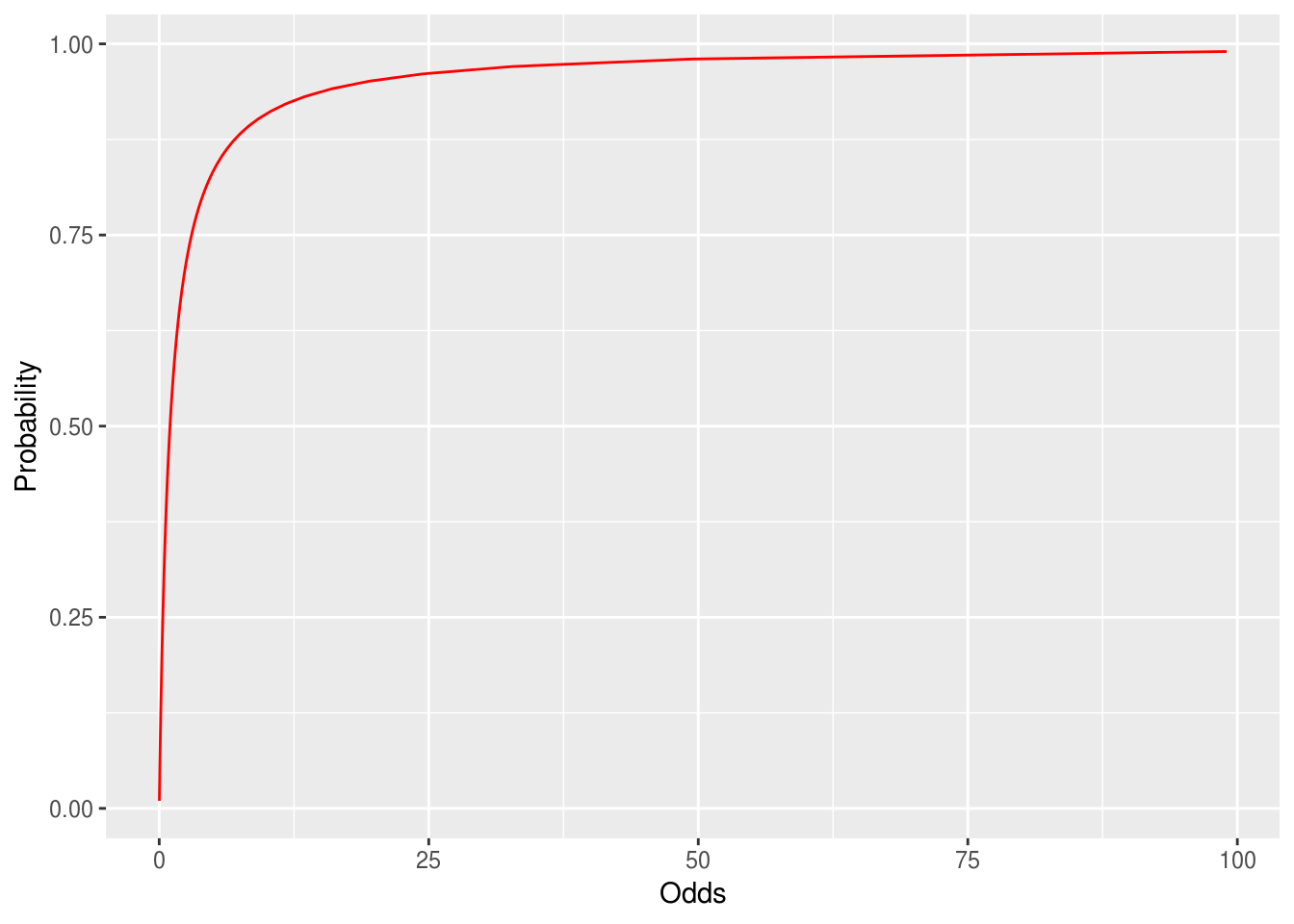

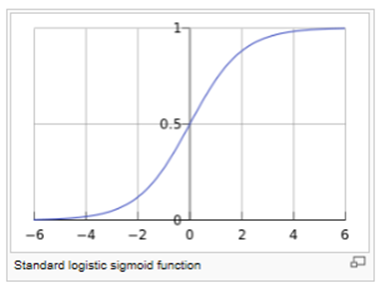

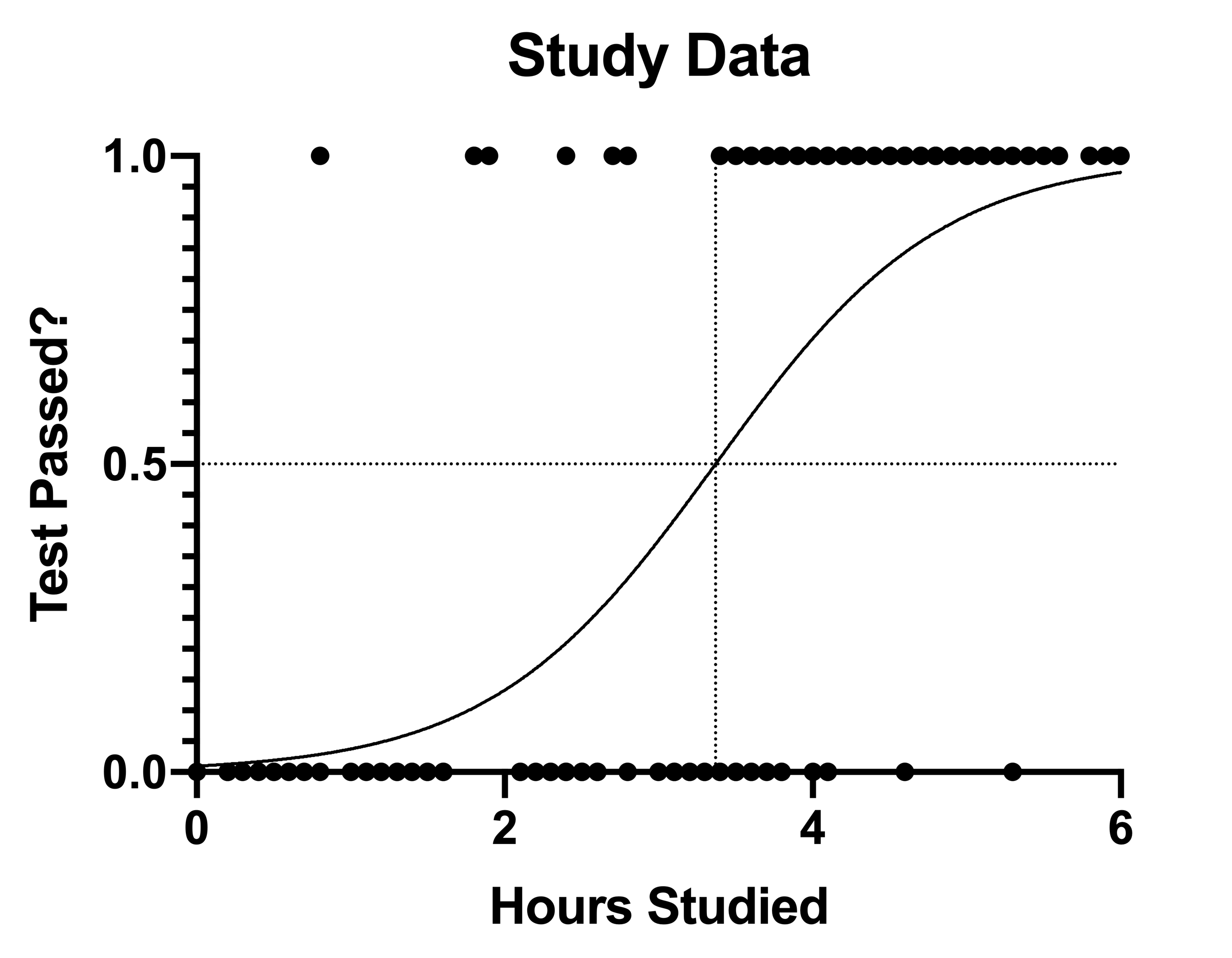

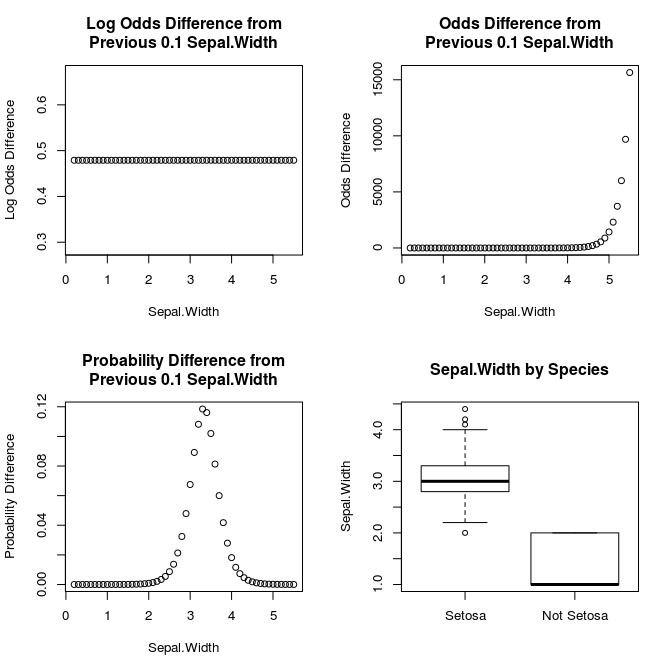

The problem is that probability and odds have different properties that give odds some advantages in statistics. For example, in logistic regression the odds ratio represents the constant effect of a predictor X on the likelihood that one outcome will occur. The key phrase here is constant effect. In regression models, we often want a measure of the unique effect of each X.

The logistic regression function \(p(\mathbf{x})\) is the sigmoid function of \(f(\mathbf{x})\): \(p(\mathbf{x}) = 1 / (1 + \exp(-f(\mathbf{x})))\). As such, it's often close to either 0 or 1. The function \(p(\mathbf{x})\) is often interpreted as the predicted probability that the output for a given \(\mathbf{x}\) is equal to 1.

The probability that an event will occur is the fraction of times you expect to see that event in many trials. Probabilities always range between 0 and 1. The odds are defined as the probability that the event will occur divided by the probability that the event will not occur. If the probability of an event occurring is Y, then the probability of the event not occurring is 1 − Y.
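
The "constant effect" idea can be checked numerically. The sketch below uses a hypothetical intercept and slope (not from any fitted model) and compares a one-unit increase in X at three different starting values.

```python
import math

def sigmoid(z):
    """Logistic function: maps f(x) to a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def odds(p):
    return p / (1.0 - p)

# Hypothetical model: f(x) = intercept + beta * x
intercept, beta = -0.5, 0.7

rows = []
for x in (-2, 0, 2):
    p_before = sigmoid(intercept + beta * x)
    p_after = sigmoid(intercept + beta * (x + 1))
    # Store the change in probability and the odds ratio for this step
    rows.append((x, p_after - p_before, odds(p_after) / odds(p_before)))

for x, prob_change, odds_ratio in rows:
    print(f"x={x:+d}  change in probability={prob_change:.3f}  odds ratio={odds_ratio:.3f}")
```

The change in probability depends on where X starts, while the odds ratio equals exp(beta) at every starting value.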

Logistic Regression Why Sigmoid Function

Logistic Regression A Concise Technical Overview Kdnuggets

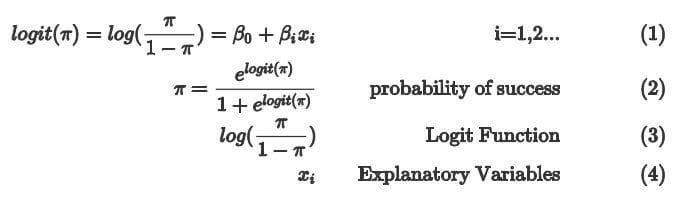

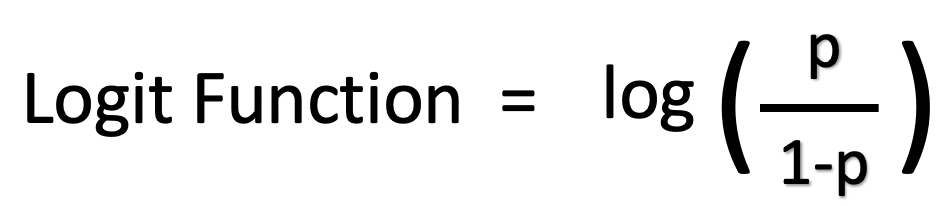

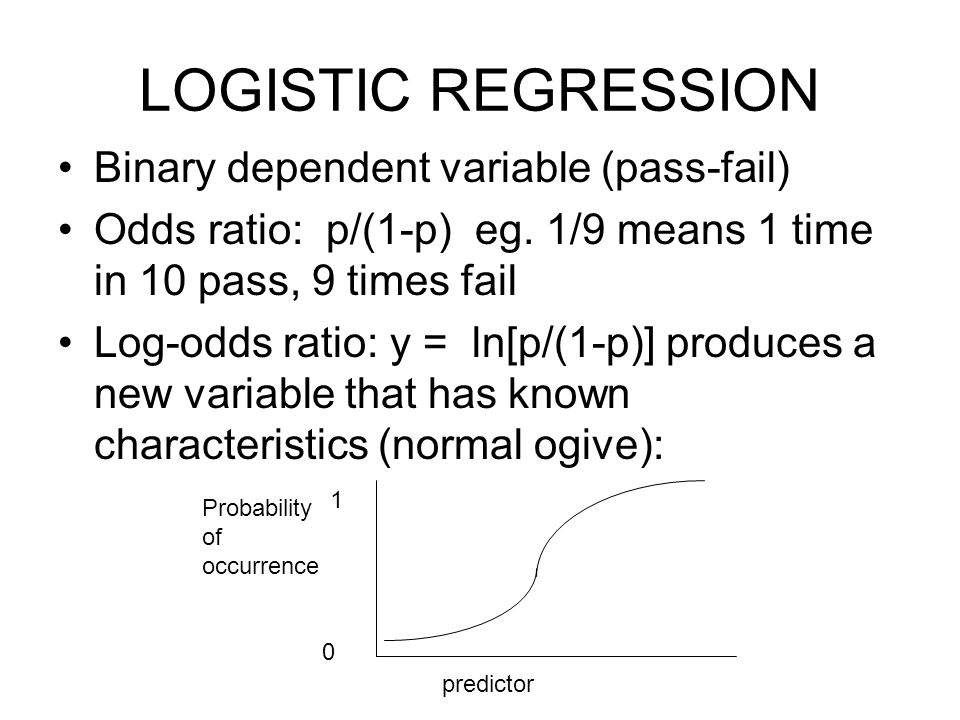

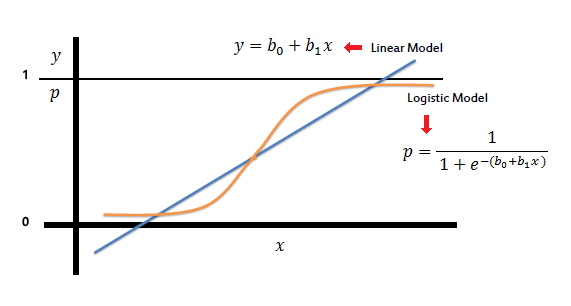

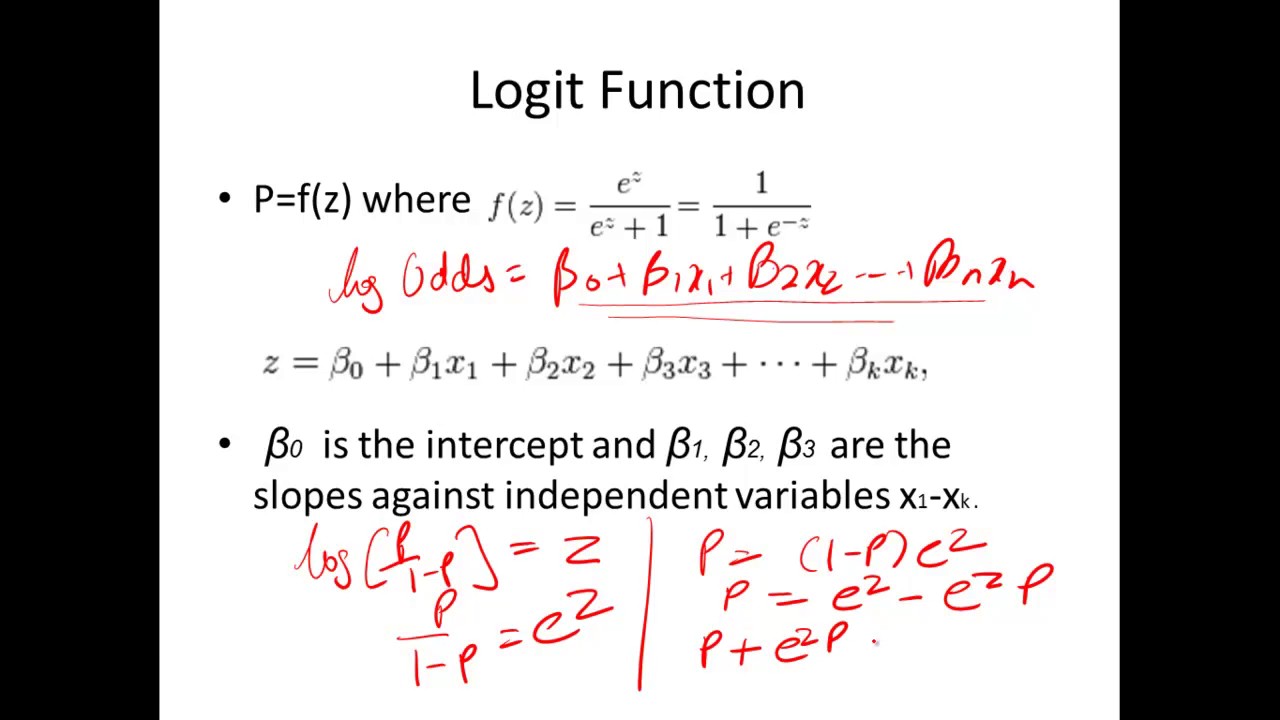

Multinomial logistic regression is a simple extension of binary logistic regression that allows for more than two categories of the dependent or outcome variable. Like binary logistic regression, multinomial logistic regression uses maximum likelihood estimation to evaluate the probability of categorical membership.

If z represents the output of the linear layer of a model trained with logistic regression, then sigmoid(z) will yield a value (a probability) between 0 and 1. In mathematical terms, \(y' = \frac{1}{1 + e^{-z}}\), where \(y'\) is the output of the logistic regression model for a particular example and \(z = b + w_1 x_1 + w_2 x_2 + \dots + w_N x_N\).

Introduction to the mathematics of logistic regression: logistic regression forms this model by creating a new dependent variable, the logit(P). If P is the probability of a 1 for a given value of X, the odds of a 1 vs. a 0 at any value of X are P/(1 − P). The logit(P) is the natural log of these odds.
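
As a rough illustration of the linear layer followed by the sigmoid, the sketch below computes z and y' for one example. The weights, bias, and feature values are made up for the demonstration, and `predict_probability` is an illustrative name.

```python
import math

def predict_probability(features, weights, bias):
    """Compute y' = 1 / (1 + e^(-z)) with z = b + w1*x1 + ... + wN*xN."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical parameters and a single example
weights = [0.8, -1.2, 0.3]
bias = 0.1
example = [1.0, 0.5, 2.0]

y_prime = predict_probability(example, weights, bias)
# Taking the logit of y' recovers z, the output of the linear layer
recovered_z = math.log(y_prime / (1.0 - y_prime))
print(f"y' = {y_prime:.3f}, logit(y') = {recovered_z:.3f}")
```

The last step shows why the logit is the natural scale for the linear part: applying it to the predicted probability returns the linear combination.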

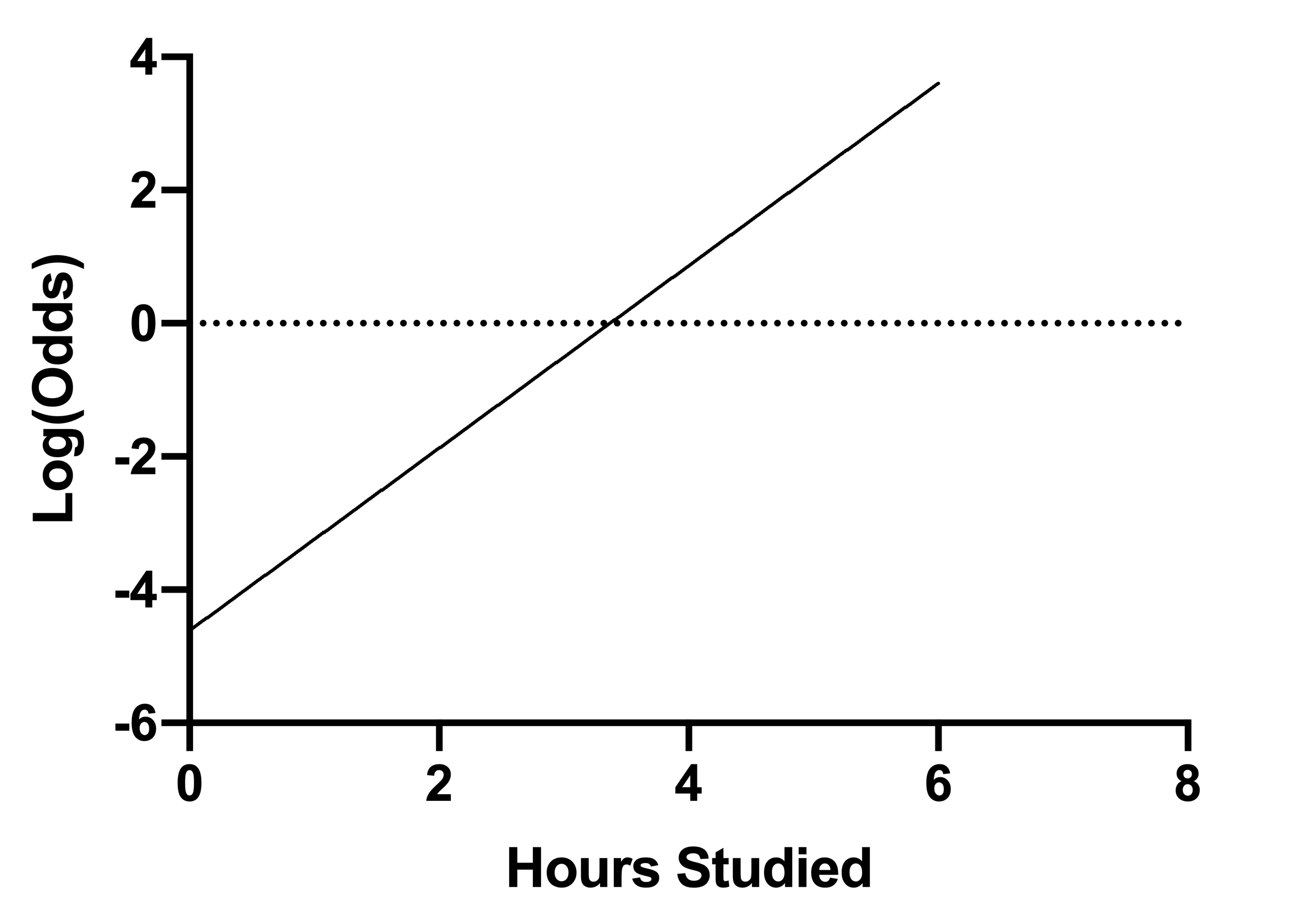

Logistic Regression Computing For The Social Sciences

Logistic Regression In R Nicholas M Michalak

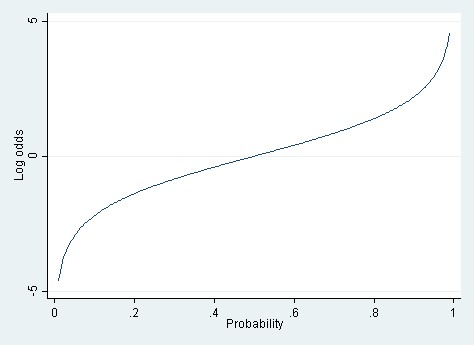

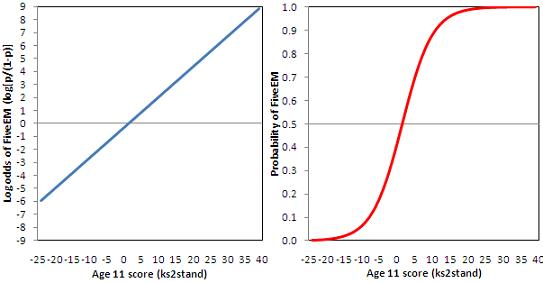

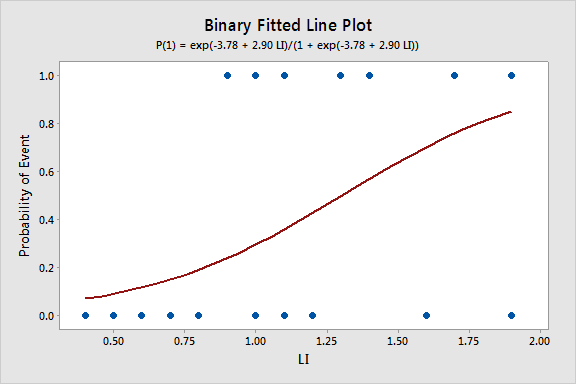

The above formula for converting logits to probabilities, exp(logit)/(1 + exp(logit)), may not have any meaning here. This formula is normally used to convert odds to probabilities, since exp(logit) is the odds. However, in logistic regression an odds ratio is a ratio between two odds values (which happen to already be ratios).

At LI = 0.8, the estimated odds of leukemia remission are 0.232. The resulting odds ratio is 0.310/0.232 = 1.336, which is the ratio of the odds of remission when LI = 0.9 compared to the odds when LI = 0.8.

Logistic regression is a linear model for the log(odds). This works because the log(odds) can take any positive or negative value, so a linear model won't lead to impossible predictions. We could fit a linear model for the probability, a linear probability model, but that can lead to impossible predictions because a probability must remain between 0 and 1.
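
The leukemia remission arithmetic can be reproduced from the two odds values quoted above. The sketch also backs out the slope on the log-odds scale implied by those two numbers; that slope is derived here and is not a value stated in the passage.

```python
import math

# Odds of remission quoted in the text
odds_at_08 = 0.232  # LI = 0.8
odds_at_09 = 0.310  # LI = 0.9

# Odds ratio for a 0.1 increase in LI
odds_ratio = odds_at_09 / odds_at_08

# On the log-odds scale the model is linear, so the implied slope is
# log(odds ratio) divided by the change in LI (derived, not quoted)
implied_slope = math.log(odds_ratio) / (0.9 - 0.8)

print(f"odds ratio: {odds_ratio:.3f}")
print(f"implied slope per unit LI: {implied_slope:.2f}")
```

Because the quoted odds are rounded to three decimals, the implied slope is only approximate.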

Ordered Logit Wikipedia

Logit Of Logistic Regression Understanding The Fundamentals By Saptashwa Bhattacharyya Towards Data Science

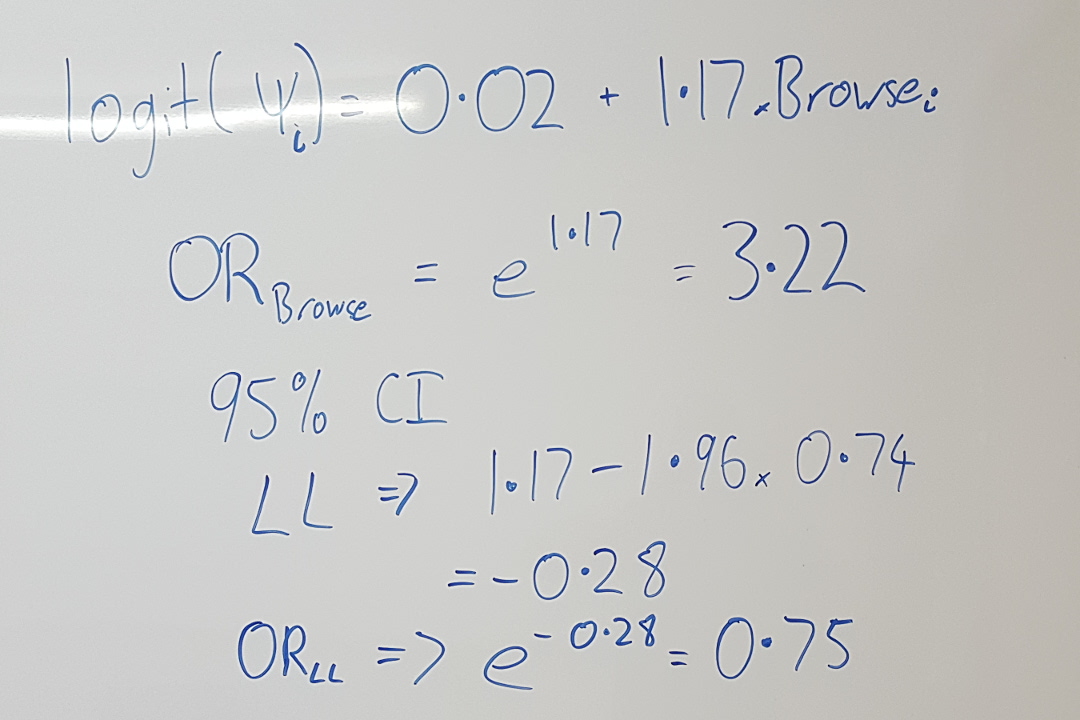

Definition of the logistic function: an explanation of logistic regression can begin with an explanation of the standard logistic function. The logistic function is a sigmoid function, which takes any real input \(t\) and outputs a value between zero and one. For the logit, this is interpreted as taking input log-odds and having output probability. The standard logistic function \(\sigma : \mathbb{R} \to (0, 1)\) is \(\sigma(t) = \frac{1}{1 + e^{-t}}\).

• The logistic regression estimate of the 'common odds ratio' between X and Y given W is \(\exp(\hat{\beta})\).
• A test for conditional independence, \(H_0: \beta = 0\), can be performed using the likelihood ratio, the Wald statistic, and the score statistic.

Odds ratios and logistic regression: when a logistic regression is calculated, the regression coefficient (\(b_1\)) is the estimated increase in the log odds of the outcome per unit increase in the value of the exposure. In other words, the exponential function of the regression coefficient (\(e^{b_1}\)) is the odds ratio associated with a one-unit increase in the exposure.
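
To make the link between the coefficient and the odds ratio explicit, the short derivation below compares the log odds at exposure values \(x\) and \(x + 1\). The symbols \(b_0\) (intercept) and \(p(x)\) (outcome probability at exposure \(x\)) are notation assumed for this sketch; the passage itself only names \(b_1\).

```latex
\begin{align*}
\log\frac{p(x)}{1 - p(x)} &= b_0 + b_1 x \\
\log\frac{p(x+1)}{1 - p(x+1)} - \log\frac{p(x)}{1 - p(x)} &= b_1 \\
\frac{p(x+1) / \bigl(1 - p(x+1)\bigr)}{p(x) / \bigl(1 - p(x)\bigr)} &= e^{b_1}
\end{align*}
```

The intercept cancels in the second line, which is why the odds ratio does not depend on the starting value of the exposure.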

What And Why Of Log Odds What Are Log Odds And Why Are They By Piyush Agarwal Towards Data Science

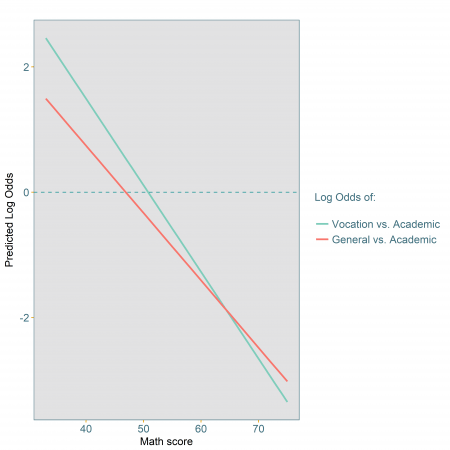

So a logit is a log of odds, and odds are a function of P, the probability of a 1. In logistic regression, we find logit(P) = a + bX, which is assumed to be linear; that is, the log odds (logit) is assumed to be linearly related to X, our IV. So there's an ordinary regression hidden in there.

Probability vs. odds vs. log odds: all these concepts essentially represent the same measure but in different ways. In the case of logistic regression, log odds is used. We will see why log odds is preferred in the logistic regression algorithm. A probability of 0.5 means that there is an equal chance for the email to be spam or not spam.

Cumulative logit models resemble baseline multinomial logistic regression but use the order to interpret and report odds ratios. The log cumulative odds ratio is proportional to the difference (distance). We can compute the probability of being in category j by taking differences between the cumulative probabilities: \(P(Y = j) = P(Y \le j) - P(Y \le j - 1)\).
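
The differencing of cumulative probabilities can be sketched in a few lines. The cumulative values below are hypothetical, and `category_probabilities` is an illustrative name.

```python
def category_probabilities(cumulative):
    """Return P(Y = j) = P(Y <= j) - P(Y <= j - 1) for ordered categories."""
    probabilities = []
    previous = 0.0  # P(Y <= 0) is zero when categories start at 1
    for value in cumulative:
        probabilities.append(value - previous)
        previous = value
    return probabilities

# Hypothetical cumulative probabilities P(Y <= j) for four ordered categories
cumulative = [0.2, 0.5, 0.9, 1.0]
per_category = category_probabilities(cumulative)
print([round(p, 3) for p in per_category])
```

The per-category probabilities sum to 1 because the last cumulative probability is 1.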

Logistic Regression Estimates Odds Ratios Of The Probability Of Download Table

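```python
import numpy as np
from sklearn.metrics import accuracy_score

def sigmoid(z):
    # Logistic function: maps log odds to a probability in (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def make_predictions(X, thetas):
    X = X.copy()
    predictions = []
    for x_row in X:
        # Linear combination of the first four columns (slice assumed; the index was garbled)
        log_odds = sum(thetas * x_row[:4])
        pred_proba = sigmoid(log_odds)
        # if probability >= 0.5, predicted class is 1
        predictions.append(1 if pred_proba >= 0.5 else 0)
    return predictions

# X_train and thetas come from earlier steps not shown in this excerpt;
# taking the label from the last column is an assumption
y_train = X_train[:, -1]
y_predict = make_predictions(X_train, thetas)
```

Odds vs. probability: probability is the likelihood of the event of interest relative to all possible events. In logistic regression, the odds ratio represents the constant effect of X on the likelihood that the categorical Y occurs.

Marginal effects vs. odds ratios: models of binary dependent variables are often estimated using logistic regression or probit models, but the estimated coefficients (or exponentiated coefficients expressed as odds ratios) are often difficult to interpret from a practical standpoint. Empirical economic research often reports 'marginal effects'.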

Presenting The Results Of A Multinomial Logistic Regression Model Odds Or Probabilities Select Statistical Consultants

What Is Predicted Probability Magoosh Statistics Blog

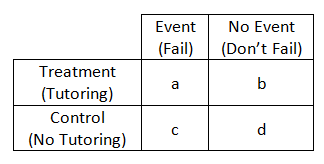

p is the probability that the event Y occurs, p(Y = 1); p/(1 − p) is the odds; ln[p/(1 − p)] is the log odds, or logit. All other components of the model are the same. The logistic regression model is simply a nonlinear transformation of the linear regression.

Odds and probabilities are the building blocks of logistic regression. Logistic regression allows you to study the influence of anything on almost anything else. Moreover, it introduces you to one of the most used techniques in machine learning: classification.

In statistics, an odds ratio tells us the ratio of the odds of an event occurring in a treatment group to the odds of an event occurring in a control group. Odds ratios appear most often in logistic regression, which is a method we use to fit a regression model that has one or more predictor variables and a binary response variable. An adjusted odds ratio is an odds ratio that has been adjusted to account for other predictor variables in the model.
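
The treatment-versus-control definition of the odds ratio can be sketched directly from a 2x2 table of counts. The counts below are hypothetical, and `odds_ratio_2x2` is an illustrative name.

```python
def odds_ratio_2x2(treated_events, treated_non_events,
                   control_events, control_non_events):
    """Odds of the event in the treatment group divided by the odds in the control group."""
    treated_odds = treated_events / treated_non_events
    control_odds = control_events / control_non_events
    return treated_odds / control_odds

# Hypothetical counts: 20 of 100 treated and 10 of 100 controls have the event
unadjusted_or = odds_ratio_2x2(20, 80, 10, 90)
print(f"unadjusted odds ratio: {unadjusted_or:.2f}")
```

This is an unadjusted odds ratio: it uses only the group membership and ignores any other predictors.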

Odds Ratio The Odds Ratio Is Used To Find The By Analyttica Datalab Medium

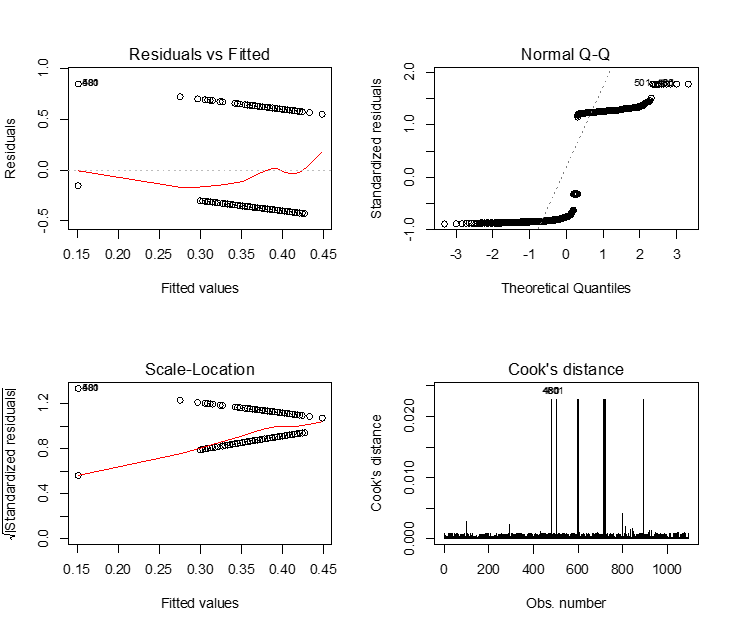

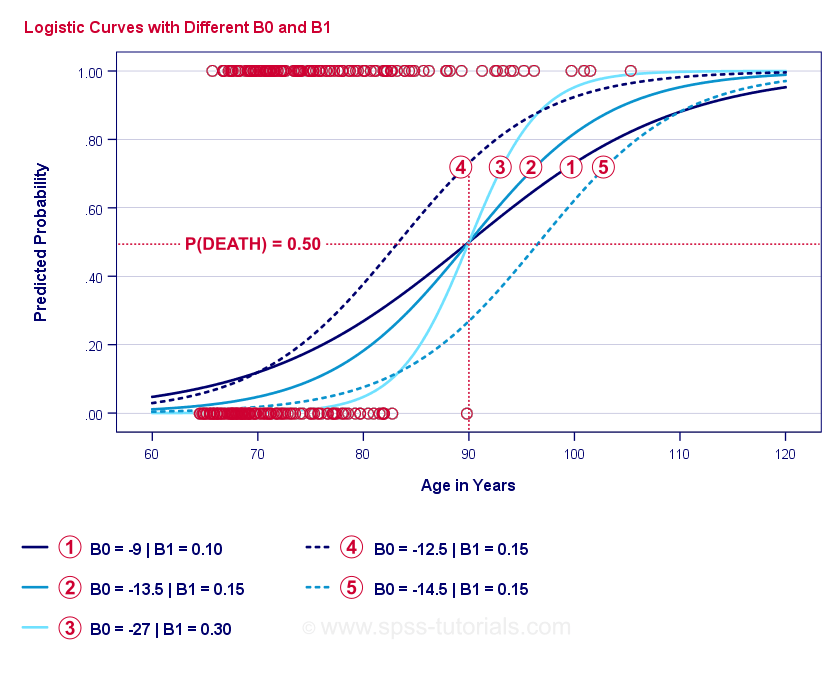

Interpretation of logistic regression: the fitted coefficient \(\hat{\beta}_1\) from the medical school logistic regression model is 5.45. The exponential of this gives the corresponding odds ratio. Donald's GPA is 2.9, and thus the model predicts that the probability of him getting into medical school is 3.26%.

The interpretation of the weights in logistic regression differs from the interpretation of the weights in linear regression, since the outcome in logistic regression is a probability between 0 and 1. The weights no longer influence the probability linearly. The weighted sum is transformed by the logistic function to a probability.

The logistic regression coefficient β associated with a predictor X is the expected change in log odds of having the outcome per unit change in X. So increasing the predictor by 1 unit (or going from one level to the next) multiplies the odds of having the outcome by \(e^{\beta}\).

Logit Wikipedia

We can also transform the log of the odds back to a probability, p = exp(log odds)/(1 + exp(log odds)) = 0.245, if we like.

Logistic regression with a single dichotomous predictor variable: now let's go one step further by adding a binary predictor variable, female, to the model.

Odds vs. probability: before diving into the nitty-gritty of logistic regression, it's important that we understand the difference between probability and odds. Odds are calculated by taking the number of events where something happened and dividing by the number of events where that same something didn't happen.

Graphpad Prism 9 Curve Fitting Guide Interpreting The Coefficients Of Logistic Regression

Logistic Regression

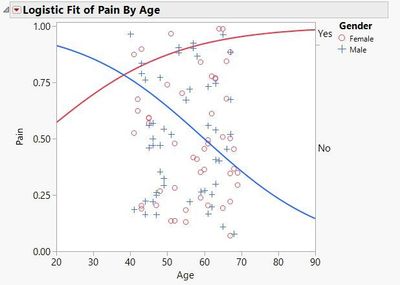

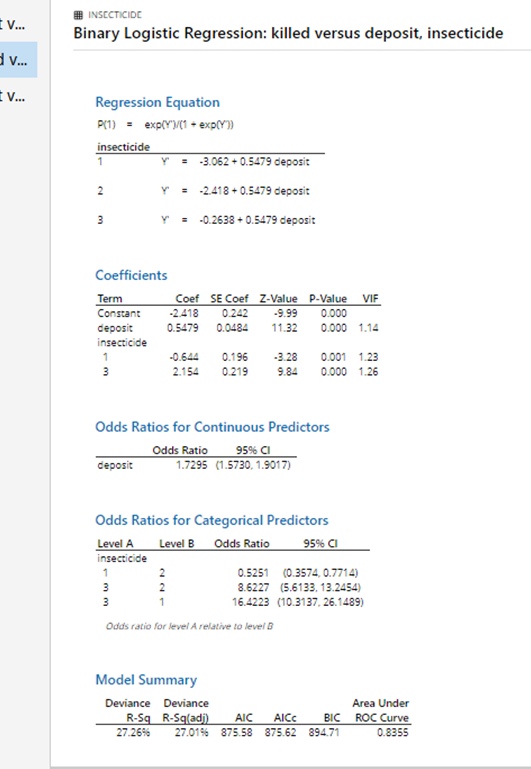

Logistic (or logit) regression can be used to investigate outcomes that are binomial or categorical (mortality vs. survival, complication vs. none, etc.). This can generate a mathematical equation, the regression equation, that incorporates these parameters as coefficients to predict the probability of the outcome.

Okay, I'll use simple words, except for maybe some special words that people who use logistic regression need to know. I'll try to explain what those words mean.

We will investigate ways of dealing with these in the binary logistic regression setting here. There is some discussion of the nominal and ordinal logistic regression settings in Section 15.2.

Logistic Regression Odds Ratio

Let Q equal the probability a female is admitted. Odds males are admitted: odds(M) = P/(1 − P) = 0.7/0.3 = 2.33. Odds females are admitted: odds(F) = Q/(1 − Q) = 0.3/0.7 = 0.43. The odds ratio for male vs. female admits is then odds(M)/odds(F) = 2.33/0.43.

Different methods of representing results of a multivariate logistic analysis: (a) as a table showing regression coefficients and significance levels, (b) as an equation for log(odds) containing regression coefficients for each variable, and (c) as an equation for odds using the exponentials (antilogs to base e) of the regression coefficients.

• Ordinal logistic regression (cumulative logit modeling)
• Proportional odds assumption
• Multinomial logistic regression
• Independence of irrelevant alternatives, discrete choice models

Although there are some differences in terms of interpretation of parameter estimates, the essential ideas are similar to binomial logistic regression.
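
The admissions arithmetic can be worked through in a few lines. The sketch assumes P = 0.7 for males and Q = 0.3 for females, which is what the quoted fractions imply.

```python
# Admission probabilities implied by the fractions in the text (assumed)
p_male = 0.7
q_female = 0.3

odds_male = p_male / (1 - p_male)        # 0.7 / 0.3
odds_female = q_female / (1 - q_female)  # 0.3 / 0.7
odds_ratio = odds_male / odds_female

print(f"odds(M) = {odds_male:.2f}")
print(f"odds(F) = {odds_female:.2f}")
print(f"odds ratio (M vs F) = {odds_ratio:.2f}")
```

Dividing the already rounded values 2.33 and 0.43 gives a slightly different result from dividing the unrounded odds, so it is safer to round only at the end.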

Why Saying A One Unit Increase Doesn T Work In Logistic Regression Learn By Marketing

How To Interpret Logistic Regression Coefficients Displayr

Unlike the closed-form solutions in OLS regression, logistic regression coefficients are estimated iteratively (SAS Institute Inc 19). The individual y values are assumed to be Bernoulli trials with a probability of success given by the predicted probability from the logistic model. The logistic regression model predicts logit values.
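
One common way to carry out the iterative estimation is Newton-Raphson on the Bernoulli log-likelihood. The sketch below is an assumption about the kind of procedure meant, not a description of what any particular package does; the data are hypothetical and `fit_logistic` is an illustrative name.

```python
import math

def fit_logistic(xs, ys, iterations=25):
    """Newton-Raphson for logit(p) = b0 + b1 * x with a single predictor."""
    b0, b1 = 0.0, 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
            w = p * (1.0 - p)      # Bernoulli variance at the current fit
            g0 += y - p            # gradient of the log-likelihood
            g1 += (y - p) * x
            h00 += w               # information matrix entries
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        # Newton step: solve the 2x2 system (information matrix) * delta = gradient
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1

# Hypothetical data: exposed group (x = 1) has 20 events out of 100,
# unexposed group (x = 0) has 10 events out of 100
xs = [1] * 100 + [0] * 100
ys = [1] * 20 + [0] * 80 + [1] * 10 + [0] * 90

b0, b1 = fit_logistic(xs, ys)
print(f"intercept = {b0:.4f}, slope = {b1:.4f}, exp(slope) = {math.exp(b1):.4f}")
```

With a single binary predictor, the exponentiated slope matches the odds ratio computed directly from the corresponding 2x2 table of counts.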

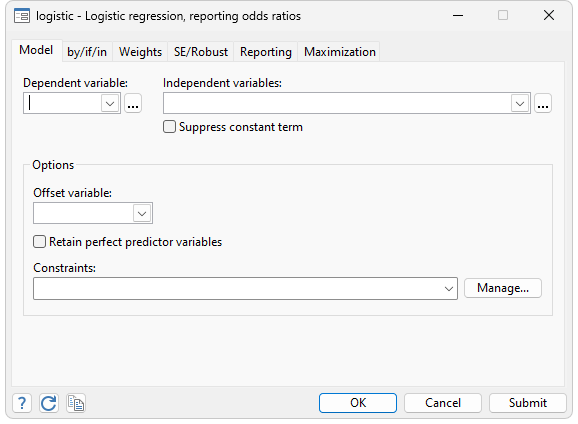

Logistic Regression Stata

Logistic Regression Data Vedas

Opposite Results In Ordinal Logistic Regression Solving A Statistical Mystery The Analysis Factor

Linear Vs Logistic Probability Models Which Is Better And When Statistical Horizons

Linear To Logistic Regression Explained Step By Step Velocity Business Solutions Limited

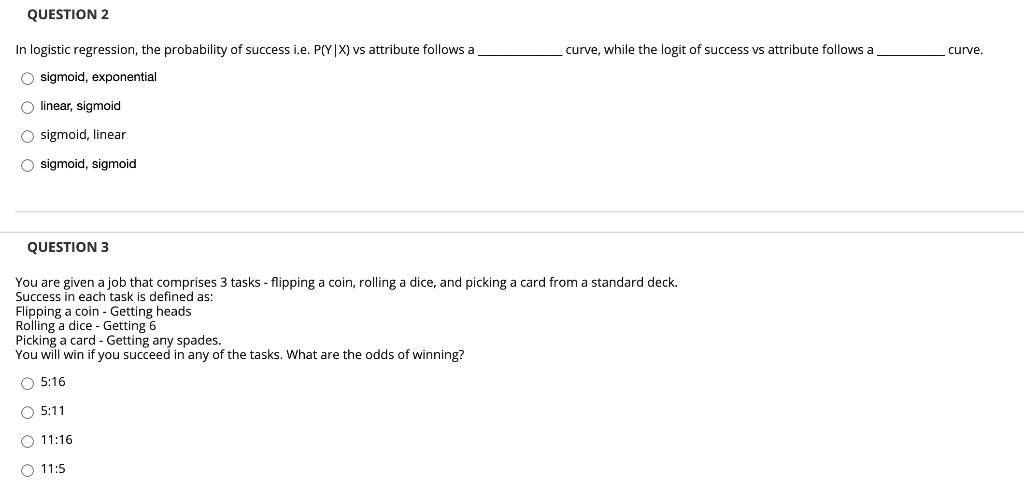

Solved Question 2 In Logistic Regression The Probability Of Chegg Com

Conduct And Interpret A Multinomial Logistic Regression Statistics Solutions

Obtaining And Interpreting Odds Ratios For Interaction Terms In Jmp

How To Interpret The Weights In Logistic Regression By Mubarak Bajwa Medium

Logistic Regression

Logistic Regression Binary Dependent Variable Pass Fail Odds Ratio P 1 P Eg 1 9 Means 1 Time In 10 Pass 9 Times Fail Log Odds Ratio Y Ln P 1 P Ppt Download

9 2 Binary Logistic Regression R For Health Data Science

4 5 Interpreting Logistic Equations

Log Odds Interpretation Of Logistic Regression Youtube

Logistic Regression Calculating A Probability

Logistic Regression In Sports Research

4 4 The Logistic Regression Model

Logistic Regression With Stata Chapter 1 Introduction To Logistic Regression With Stata

Logistic Regression

Simplifying Logistic Regression Dzone Ai

Logistic Regression Circulation

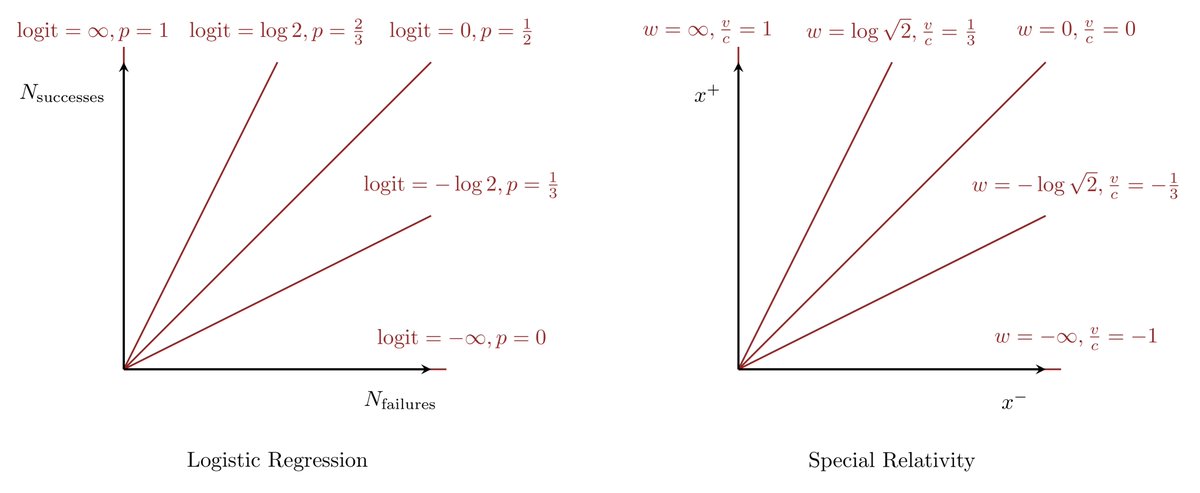

Mathfrak Michael Shapes Dude Betancourt Friendly Reminder That From A Math Perspective Probabilities In Logistic Regression Are Almost Exactly The Same As Velocities In Special Relativity If You Understand Log Odds

Graphpad Prism 9 Curve Fitting Guide Interpreting The Coefficients Of Logistic Regression

Logistic Regression Odds And Log Odds Pattern For Equidistant Observations By Shambhu Gupta Medium

Estimated Odds Ratios From Logistic Regression Models Of The Download Table

9 2 Binary Logistic Regression R For Health Data Science

Role Of Log Odds In Logistic Regression Geeksforgeeks

The Difference Between Relative Risk And Odds Ratios The Analysis Factor

12 1 Logistic Regression Stat 462

Odds

Keep Calm And Learn Multilevel Logistic Modeling A Simplified Three Step Procedure Using Stata R Mplus And Spss

Logistic Regression Single And Multiple Overview Defined A Model For Predicting One Variable From Other Variable S Variables Iv S Is Continuous Categorical Ppt Download

Simplifying Logistic Regression Dzone Ai

Interpreting Logistic Models R Bloggers

Logistic Regression

Logistic Probability Score The Logistic Probability Score Function By Analyttica Datalab Medium

Cureus What S The Risk Differentiating Risk Ratios Odds Ratios And Hazard Ratios

Logistic Regression

Logistic Regression Essentials In R Articles Sthda

Gr S Website

Advantages And Disadvantages Of Logistic Regression Geeksforgeeks

Logistic Regression 1 Sociology 11 Lecture 4 Copyright

What Are Alternatives To Logistic Regression Quora

Log Odds Definition And Worked Statistics Problems

Proc Logistic And Logistic Regression Models

Probability Calculation Using Logistic Regression

What And Why Of Log Odds What Are Log Odds And Why Are They By Piyush Agarwal Towards Data Science

Odds And Log Odds Clearly Explained Youtube

Ctspedia Ctspedia Oddsterm

How To Perform Ordinal Logistic Regression In R R Bloggers

R Calculate And Interpret Odds Ratio In Logistic Regression Stack Overflow

Logistic Regression Model Analysis Visualization And Prediction Regenerative

25 1 Link Functions Just Enough R

Logistic Regression

Odds

How To Calculate Odds Ratios From Logistic Regression Coefficients Proteus

Odds And Log Odds Clearly Explained Youtube

Simple Logistic Regression

Probability Calculation Using Logistic Regression

Logit Of Logistic Regression Understanding The Fundamentals By Saptashwa Bhattacharyya Towards Data Science

Ii Binary Logistic Regression Insecticides Xlsx 3 Chegg Com

Logistic Regression 1 From Odds To Probability Dr Yury Zablotski

Visualizing The Effects Of Logistic Regression University Of Virginia Library Research Data Services Sciences

Logistic Regression In Python Real Python

9 2 Binary Logistic Regression R For Health Data Science

Simple Logistic Regression

Graphpad Prism 9 Curve Fitting Guide Example Simple Logistic Regression

Logistic Regression Wikipedia

Course Notes For Is 64 Statistics And Predictive Analytics

Nhanes Tutorials Module 10 Logistic Regression

Logit Of Logistic Regression Understanding The Fundamentals By Saptashwa Bhattacharyya Towards Data Science

Why Saying A One Unit Increase Doesn T Work In Logistic Regression Learn By Marketing

Logistic Regression Logistic Regression Binary Response Variable And

Logistic Regression Calculating A Probability

How To Go About Interpreting Regression Cofficients

Proportional Odds Logistic Regression On Laef The Probability Of Download Scientific Diagram

Multiple Logistic Regression Analysis

Logistic Regression The Ultimate Beginners Guide

3 Logistic Regression Logit Transformation In Detail Youtube

Understanding Logistic Regression Worldsupporter

Logistic Regression Analysis An Overview Sciencedirect Topics

Use And Interpret Logistic Regression In Spss